Functional Programming With Java: Streams

Java 8 gave us the Stream API, a lazy-sequential data pipeline of functional blocks.

A stream isn’t a data structure, and it doesn’t modify the elements of its source. It’s just a dumb pipe providing the scaffolding to operate on those elements, which is what really makes it a smart pipe.

Basic Concept

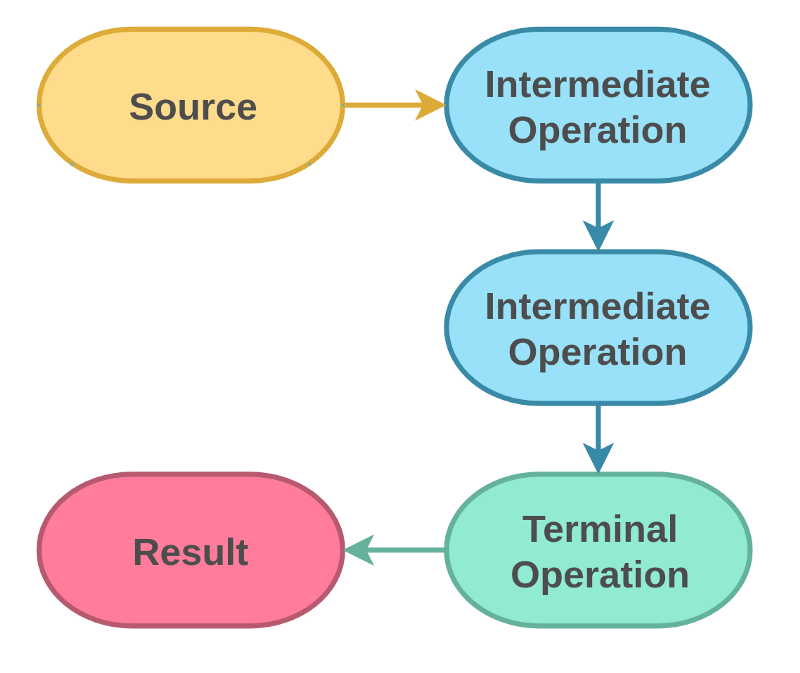

The basic concept behind streams is simple: We have a data source, perform zero or more intermediate operations, and get a result.

The parts of a stream can be separated into three groups:

- Obtaining the stream (source)

- Doing the work (intermediate operations)

- Getting a result (terminal operation)

Obtaining the stream

The first step is obtaining a stream. Many data structures of the JDK already support providing a stream:

- java.util.Collection#stream()

- java.util.Arrays#stream(T[] array)

- java.nio.file.Files#list(Path dir)

- java.nio.file.Files#lines(Path path)

Or we can create one by using java.util.stream.Stream#of(T... values) with our values.

The class java.util.stream.StreamSupport also provides static methods for creating streams from a Spliterator.
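The most common sources can be sketched as follows. This is a minimal illustration only; the class name StreamSourcesDemo and its method names are made up for this example and are not part of the JDK.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical demo class: shows three ways to obtain a stream.
class StreamSourcesDemo {

    // Collection#stream(): every Collection can act as a source.
    static List<String> fromCollection() {
        List<String> names = Arrays.asList("a", "b", "c");
        return names.stream().collect(Collectors.toList());
    }

    // Arrays#stream(T[]): wraps an existing array.
    static long fromArray() {
        String[] names = {"a", "b", "c"};
        return Arrays.stream(names).count();
    }

    // Stream#of(T...): builds a stream directly from the given values.
    static List<Integer> fromValues() {
        return Stream.of(1, 2, 3).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(fromCollection());
        System.out.println(fromArray());
        System.out.println(fromValues());
    }
}
```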

Doing the work

The java.util.stream.Stream interface provides a lot of different operations.

Filtering

Mapping

- map(Function<? super T, ? extends R> mapper)

- mapToInt(ToIntFunction<? super T> mapper)

- mapToLong(ToLongFunction<? super T> mapper)

- mapToDouble(ToDoubleFunction<? super T> mapper)

- flatMap(Function<? super T, ? extends Stream<? extends R>> mapper)

- flatMapToInt(Function<? super T, ? extends IntStream> mapper)

- flatMapToLong(Function<? super T, ? extends LongStream> mapper)

- flatMapToDouble(Function<? super T, ? extends DoubleStream> mapper)
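As a rough sketch of how the mapping operations differ: map transforms each element one-to-one, mapToInt switches to a primitive stream, and flatMap flattens nested sources into a single stream. The class MappingDemo and its methods are hypothetical names chosen for this example.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical demo class for the mapping operations.
class MappingDemo {

    // map: one output element per input element.
    static List<Integer> lengths(List<String> words) {
        return words.stream()
                    .map(String::length)
                    .collect(Collectors.toList());
    }

    // mapToInt: yields an IntStream, which offers sum() without boxing.
    static int totalLength(List<String> words) {
        return words.stream()
                    .mapToInt(String::length)
                    .sum();
    }

    // flatMap: each inner list becomes a stream, and all of them are merged.
    static List<String> flatten(List<List<String>> nested) {
        return nested.stream()
                     .flatMap(List::stream)
                     .collect(Collectors.toList());
    }
}
```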

Sizing/sorting

Debugging

Getting a result

Performing operations on the Stream elements is great. But at some point, we want to get a result back from our data pipeline.

Terminal operations start the lazy pipeline, trigger the actual work, and don’t return a new stream.

Aggregate to new collection/array

- R collect(Collector<? super T, A, R> collector)

- R collect(Supplier<R> supplier, BiConsumer<R, ? super T> accumulator, BiConsumer<R, R> combiner)

- Object[] toArray()

- A[] toArray(IntFunction<A[]> generator)

Reduce to a single value

- T reduce(T identity, BinaryOperator<T> accumulator)

- Optional<T> reduce(BinaryOperator<T> accumulator)

- U reduce(U identity, BiFunction<U, ? super T, U> accumulator, BinaryOperator<U> combiner)
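The three reduce variants can be sketched like this. The class ReduceDemo and its method names are assumptions made for illustration; only the reduce calls themselves come from the Stream API.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical demo class for the three reduce variants.
class ReduceDemo {

    // Identity + accumulator: always returns a value, even for an empty stream.
    static int sum(List<Integer> values) {
        return values.stream().reduce(0, Integer::sum);
    }

    // Accumulator only: returns an Optional because the stream might be empty.
    static Optional<Integer> max(List<Integer> values) {
        return values.stream().reduce(Integer::max);
    }

    // Identity + accumulator + combiner: the result type (Integer) differs
    // from the element type (String). The combiner merges partial results.
    static int totalLength(List<String> words) {
        return words.stream()
                    .reduce(0, (acc, word) -> acc + word.length(), Integer::sum);
    }
}
```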

Calculations

- Optional<T> min(Comparator<? super T> comparator)

- Optional<T> max(Comparator<? super T> comparator)

- long count()

Matching

- boolean allMatch(Predicate<? super T> predicate)

- boolean anyMatch(Predicate<? super T> predicate)

- boolean noneMatch(Predicate<? super T> predicate)

Finding

Consuming

Stream Characteristics

Streams aren’t just glorified loops. Sure, we can express any stream with a loop — and most loops with streams. But this doesn’t mean they’re equal or one is always better than the other.

Laziness

The most significant advantage of streams over loops is laziness. Until we call a terminal operation on a stream, no work is done. We can build up our processing pipeline over time and only run it at the exact time we want it to.

And not just the building of the pipeline is lazy. Most intermediate operations are lazy, too. Elements are only consumed as they’re needed.
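A small sketch can make the laziness visible. It uses peek only to record which elements are actually pulled through the pipeline; the class LazinessDemo and its method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

// Hypothetical demo class: observes which elements a pipeline touches.
class LazinessDemo {

    // Builds a pipeline but never calls a terminal operation.
    static List<String> visitedWithoutTerminalOperation() {
        List<String> visited = new ArrayList<>();
        Stream<String> pipeline = Stream.of("a", "b", "c", "d")
                                        .peek(visited::add)
                                        .map(String::toUpperCase);
        // No terminal operation was called, so nothing was processed.
        return visited;
    }

    // findFirst() stops as soon as one element passed the filter.
    static List<String> visitedByFindFirst() {
        List<String> visited = new ArrayList<>();
        Stream.of("a", "b", "c", "d")
              .peek(visited::add)
              .map(String::toUpperCase)
              .filter(s -> s.equals("B"))
              .findFirst();
        // Only "a" and "b" were consumed; "c" and "d" were never touched.
        return visited;
    }
}
```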

(Mostly) stateless

One of the main pillars of functional programming is immutable state. Most intermediate operations are stateless, except for distinct(), sorted(...), limit(...), and skip(...).

Even though Java allows the building of stateful lambdas, we should always strive to design them to be stateless. Any state can have severe impacts on safety and performance and might introduce unintended side effects.

Optimizations included

Thanks to being (mostly) stateless, streams can optimize themselves quite efficiently. Stateless intermediate operations can be fused together to a combined consumer. Redundant operations might be removed. And some pipeline paths might be short-circuited.

The JVM will optimize traditional loops, too. But streams are an easier target due to their multi-operation design and their mostly stateless nature.

Non-reusable

Being just a dumb pipeline, streams can’t be reused. But they don’t change the original data source — we can always create another stream from the source.
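As a minimal sketch of what "can’t be reused" means in practice: calling a second terminal operation on the same stream throws an IllegalStateException, while a fresh stream from the same source works fine. The class ReuseDemo and its method names are invented for this example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Stream;

// Hypothetical demo class: a stream can only be consumed once.
class ReuseDemo {

    // Returns true if the second terminal operation is rejected.
    static boolean secondUseFails() {
        Stream<String> stream = Stream.of("a", "b");
        stream.count(); // first terminal operation consumes the stream
        try {
            stream.count(); // second use of the same stream instance
            return false;
        } catch (IllegalStateException e) {
            return true;
        }
    }

    // The source is untouched, so we can simply ask it for a new stream.
    static long freshStreamWorks() {
        List<String> source = Arrays.asList("a", "b");
        source.stream().count();
        return source.stream().count();
    }
}
```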

Less boilerplate

Streams are often easier to read and comprehend.

This is a simple data-processing example with a for loop:

    List<Album> albums = ...;
    List<String> result = new ArrayList<>();

    for (Album album : albums) {
        if (album.getYear() != 1999) {
            continue;
        }
        if (album.getGenre() != Genre.ALTERNATIVE) {
            continue;
        }
        result.add(album.getName());
        if (result.size() == 5) {
            break;
        }
    }

    Collections.sort(result);

This code is equivalent to:

    List<String> result =
        albums.stream()
              .filter(album -> album.getYear() == 1999)
              .filter(album -> album.getGenre() == Genre.ALTERNATIVE)
              .limit(5)
              .map(Album::getName)
              .sorted()
              .collect(Collectors.toList());

We have a shorter code block, clearer operations, no loop boilerplate, and no extra temporary variables. All nicely packaged in a fluent API. This way, our code reflects the what, and we no longer need to care about the actual iteration process, the how.

Easy parallelization

Concurrency is hard to do right and easy to do wrong.

Streams support parallel execution (backed by the fork/join framework) and remove much of the overhead of doing it ourselves.

A stream can be parallelized by calling the intermediate operation parallel() and turned back to sequential by calling sequential().

But not every stream pipeline is a good match for parallel processing.

The source must be big enough and the operations costly enough to justify the overhead of multiple threads. Context switches are expensive. We shouldn’t parallelize a stream just because we can.
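Switching between the two modes can be sketched as follows. The class ParallelDemo is a hypothetical example; with a source this small, the parallel variant only demonstrates the API and would not be worth its overhead in real code.

```java
import java.util.List;
import java.util.stream.Stream;

// Hypothetical demo class: the same pipeline, sequential or parallel.
class ParallelDemo {

    static int sumOfSquares(List<Integer> values, boolean parallel) {
        Stream<Integer> stream = values.stream();
        if (parallel) {
            stream = stream.parallel(); // intermediate operation, nothing runs yet
        }
        // Integer::sum is associative and stateless, so the result
        // doesn't depend on how the work is split across threads.
        return stream.map(n -> n * n)
                     .reduce(0, Integer::sum);
    }

    // sequential() switches a parallel stream back.
    static boolean isParallelAfterSwitchingBack(List<Integer> values) {
        return values.stream().parallel().sequential().isParallel();
    }
}
```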

Primitive handling

Just like with functional interfaces, streams have specialized classes for dealing with primitives to avoid autoboxing/unboxing: IntStream, LongStream, and DoubleStream.
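The JDK’s primitive stream types are IntStream, LongStream, and DoubleStream. A short sketch of how they might be used, with PrimitiveStreamsDemo and its methods being hypothetical names for this example:

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Hypothetical demo class for primitive streams.
class PrimitiveStreamsDemo {

    // mapToInt switches from Stream<String> to IntStream, which offers sum().
    static int sumOfLengths(List<String> words) {
        return words.stream()
                    .mapToInt(String::length)
                    .sum();
    }

    // average() returns an OptionalDouble, as the stream might be empty.
    static double average(int... values) {
        return IntStream.of(values)
                        .average()
                        .orElse(0.0);
    }

    // boxed() converts back to a Stream<Integer> when objects are needed.
    static List<Integer> boxedRange() {
        return IntStream.range(0, 3)
                        .boxed()
                        .collect(Collectors.toList());
    }
}
```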

Best Practices and Caveats

Smaller operations

Lambdas can be simple one-liners or huge code blocks if wrapped in curly braces. To retain simplicity and conciseness, we should restrict ourselves to these two use cases for operations:

- One-line expressions, e.g., .filter(album -> album.getYear() > 4)

- Method references, e.g., .filter(this::myFilterCriteria)

By using method references, we can have more complex operations, reuse operational logic, and even unit test it more easily.

Method references

Not only simplicity and conciseness are affected by using method references. There are also implications on the bytecode level.

The bytecode of a lambda and a method reference differs slightly, with the method reference generating less. A lambda’s body is compiled into an additional synthetic method that gets invoked, while a method reference can point directly at the existing method.

Also, by using method references, we lose the visual noise of the lambda:

    source.stream()
          .map(s -> s.length())
          .collect(Collectors.toList());

    // VS.

    source.stream()
          .map(String::length)
          .collect(Collectors.toList());

Cast and Type Checks

Don’t forget that Class<T> is an object, too, providing many helpful methods:

    source.stream()
          .filter(String.class::isInstance)
          .map(String.class::cast)
          .collect(Collectors.toList());

Return a value or check for null

Intermediate operations should either return a value or handle null in the next operation.

Adding a simple .filter(Objects::nonNull) might be enough to ensure no NPEs.

Code formatting

By putting each pipeline step into a new line, we can improve readability:

    List<String> result = albums.stream().filter(album -> album.getReleaseYear() == 1999)
        .filter(album -> album.getGenre() == Genre.ALTERNATIVE).limit(5)
        .map(Album::getName).sorted().collect(Collectors.toList());

    // VS.

    List<String> result =
        albums.stream()
              .filter(album -> album.getReleaseYear() == 1999)
              .filter(album -> album.getGenre() == Genre.ALTERNATIVE)
              .limit(5)
              .map(Album::getName)
              .sorted()
              .collect(Collectors.toList());

It also makes it easier to set breakpoints at the correct pipeline step.

Not every iteration is a stream

As written before, we shouldn’t replace every loop. Just because something iterates doesn’t make it a valid target for stream-based processing. Often a traditional loop might be a better choice than using forEach(...) on a stream.

Effectively final

We can access variables outside of intermediate operations, as long as they are in scope and effectively final.

This means the variable isn’t allowed to change after initialization, but it doesn’t need an explicit final modifier.

And by just assigning the value to a new variable, we can make it effectively final again:

    String nonFinal = null;
    nonFinal = "changed value makes it non-final";
    String thisIsEffectivelyFinalAgain = nonFinal;

Sometimes this restriction seems cumbersome. We can still change the internal state of an object referenced by an effectively final variable, because only the reference itself must not change. But doing so undermines the concept of immutability and can introduce unintended side effects.
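In a stream pipeline, the re-assignment trick might look like the following sketch. The class EffectivelyFinalDemo and the method withPrefix are hypothetical names for illustration.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical demo class: capturing a variable in a lambda.
class EffectivelyFinalDemo {

    static List<String> withPrefix(List<String> values, String rawPrefix) {
        String prefix = rawPrefix;
        if (prefix == null) {
            prefix = ""; // re-assignment: 'prefix' is no longer effectively final
        }

        // Using 'prefix' inside the lambda below would not compile.
        // A new variable that is assigned exactly once is effectively final.
        String effectivelyFinalPrefix = prefix;

        return values.stream()
                     .map(value -> effectivelyFinalPrefix + value)
                     .collect(Collectors.toList());
    }
}
```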

Checked Exceptions

Streams and Exceptions are a subject that warrants their own article(s), but I’ll try to summarize them.

This code won’t compile:

    List<Class> classes =
        Stream.of("java.lang.Object",
                  "java.lang.Integer",
                  "java.lang.String")
              .map(Class::forName)
              .collect(Collectors.toList());

The method Class.forName(String className) throws a checked exception, a ClassNotFoundException, and requires a try-catch, making the code unbearable to read:

    List<Class> classes =
        Stream.of("java.lang.Object",
                  "java.lang.Integer",
                  "java.lang.String")
              .map(className -> {
                  try {
                      return Class.forName(className);
                  }
                  catch (ClassNotFoundException e) {
                      // Ignore error
                      return null;
                  }
              })
              // additional filter step required, to deal with null
              .filter(Objects::nonNull)
              .collect(Collectors.toList());

By refactoring the className conversion to a dedicated method, we can retain the simplicity of the stream:

    Class toClass(String className) {
        try {
            return Class.forName(className);
        }
        catch (ClassNotFoundException e) {
            return null;
        }
    }

    List<Class> classes =
        Stream.of("java.lang.Object",
                  "java.lang.Integer",
                  "java.lang.String")
              .map(this::toClass)
              .filter(Objects::nonNull)
              .collect(Collectors.toList());

We still need to handle possible null values, but the checked exception isn’t visible in the stream code.

Another solution for dealing with checked exceptions is wrapping the intermediate operations in consumers/functions etc. that catch the checked exceptions and rethrow them as unchecked. But, in my opinion, that’s more like an ugly hack than a valid solution.
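For completeness, here is a minimal sketch of what such a wrapper could look like. The interface ThrowingFunction and the helper unchecked are hypothetical names invented for this example; nothing like them exists in the JDK.

```java
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical demo class: rethrowing checked exceptions as unchecked.
class UncheckedWrapperDemo {

    // Hypothetical interface: like Function, but apply may throw checked exceptions.
    @FunctionalInterface
    interface ThrowingFunction<T, R> {
        R apply(T value) throws Exception;
    }

    // Adapts a ThrowingFunction to a plain Function.
    static <T, R> Function<T, R> unchecked(ThrowingFunction<T, R> fn) {
        return value -> {
            try {
                return fn.apply(value);
            } catch (RuntimeException e) {
                throw e; // already unchecked, pass it through unchanged
            } catch (Exception e) {
                throw new RuntimeException(e); // keep the original as the cause
            }
        };
    }

    static List<Class<?>> load(String... classNames) {
        Function<String, Class<?>> loader = unchecked(Class::forName);
        return Stream.of(classNames)
                     .map(loader)
                     .collect(Collectors.toList());
    }
}
```

The stream code stays compact, but a missing class now aborts the whole pipeline with a RuntimeException at the terminal operation, which is one reason to treat this approach with caution.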

If an operation throws a checked exception, we should refactor it to a method and handle its exception accordingly.

Unchecked Exceptions

Even if we handle all checked exceptions, our streams can still blow up thanks to unchecked exceptions.

There’s not a one-size-fits-all solution for preventing exceptions, just as there’s not in any other code. Developer discipline can greatly reduce the risk. Use small, well-defined operations with enough checks and validation. This way we can at least minimize the risk.

Debugging

Streams can be debugged like any other fluent call. If we have a single operation per line, a breakpoint will stop accordingly. But the synthetic methods generated for lambdas, together with the stream’s internal frames, can result in a really confusing stack trace.

During development, we could also utilize the intermediate operation peek(Consumer<? super T> action) to intercept an element.

The operation is mainly for debugging purposes and shouldn’t be used in the stream’s final form.
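A quick sketch of peek during development: here it writes to a list instead of a logger so the order of events is easy to inspect. The class PeekDemo and its method are hypothetical.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical demo class: intercepting elements between pipeline steps.
class PeekDemo {

    static List<String> shortWordsUpperCase(List<String> words, List<String> log) {
        return words.stream()
                    .filter(word -> word.length() <= 4)
                    .peek(word -> log.add("after filter: " + word))
                    .map(String::toUpperCase)
                    .peek(word -> log.add("after map: " + word))
                    .collect(Collectors.toList());
    }
}
```

In a sequential stream, each element travels through the whole pipeline before the next one starts, so the log entries for one element appear together.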

IntelliJ provides a visual debugger.

Order of operations

Think of a simple stream:

    Stream.of("ananas", "oranges", "apple", "pear", "banana")
          .map(String::toUpperCase)        // 1. Process
          .sorted(String::compareTo)       // 2. Sort
          .filter(s -> s.startsWith("A"))  // 3. Filter
          .forEach(System.out::println);

This code will run map five times, sorted eight times, filter five times, and forEach two times. This means a total of 20 operations to output two values.

If we reorder the pipeline parts, we can reduce the total operations count significantly without changing the actual outcome:

    Stream.of("ananas", "oranges", "apple", "pear", "banana")
          .filter(s -> s.startsWith("a"))  // 1. Filter first
          .map(String::toUpperCase)        // 2. Process
          .sorted(String::compareTo)       // 3. Sort
          .forEach(System.out::println);

By filtering first, we’re going to restrict the other operations to a minimum: filter five times, map two times, sort one time, and forEach two times, which saves us 10 operations in total.
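The map and filter counts can be checked with a small sketch that increments counters inside the lambdas. The class OperationOrderDemo is hypothetical, and the counters are side effects used purely for measurement. The sketch deliberately leaves out the comparator calls, since their exact number depends on the sort implementation.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.Stream;

// Hypothetical demo class: counts how often map and filter are invoked.
class OperationOrderDemo {

    static Stream<String> fruits() {
        return Stream.of("ananas", "oranges", "apple", "pear", "banana");
    }

    // Returns { mapCalls, filterCalls } for the map-first pipeline.
    static int[] mapFirst() {
        AtomicInteger mapCalls = new AtomicInteger();
        AtomicInteger filterCalls = new AtomicInteger();
        fruits().map(s -> { mapCalls.incrementAndGet(); return s.toUpperCase(); })
                .sorted(String::compareTo)
                .filter(s -> { filterCalls.incrementAndGet(); return s.startsWith("A"); })
                .forEach(s -> { });
        return new int[] { mapCalls.get(), filterCalls.get() };
    }

    // Returns { mapCalls, filterCalls } for the filter-first pipeline.
    static int[] filterFirst() {
        AtomicInteger mapCalls = new AtomicInteger();
        AtomicInteger filterCalls = new AtomicInteger();
        fruits().filter(s -> { filterCalls.incrementAndGet(); return s.startsWith("a"); })
                .map(s -> { mapCalls.incrementAndGet(); return s.toUpperCase(); })
                .sorted(String::compareTo)
                .forEach(s -> { });
        return new int[] { mapCalls.get(), filterCalls.get() };
    }
}
```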

Java 9 Enhancements

Java 9 brought four additions to streams:

- dropWhile(Predicate<? super T> predicate): Drops elements until the predicate returns false for the first time.

- takeWhile(Predicate<? super T> predicate): Takes elements until the predicate returns false for the first time.

- Stream<T> ofNullable(T t): Returns a single-element stream if the value is non-null; otherwise, an empty stream.

- iterate(T seed, Predicate<? super T> hasNext, UnaryOperator<T> next): Generates a finite stream, an equivalent of a for loop, like Stream.iterate(0, i -> i < 10, i -> i + 1).
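The four additions can be tried out with a short sketch, which needs Java 9 or later to compile. The class Java9StreamsDemo and its method names are hypothetical.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical demo class for the Java 9 stream additions.
class Java9StreamsDemo {

    // Drops 1 and 2; once 3 fails the predicate, everything else is kept.
    static List<Integer> dropSmall() {
        return Stream.of(1, 2, 3, 1)
                     .dropWhile(n -> n < 3)
                     .collect(Collectors.toList());
    }

    // Takes 1 and 2; stops at 3, so the trailing 1 is never reached.
    static List<Integer> takeSmall() {
        return Stream.of(1, 2, 3, 1)
                     .takeWhile(n -> n < 3)
                     .collect(Collectors.toList());
    }

    // Zero elements for null, one element otherwise.
    static long elementCount(String value) {
        return Stream.ofNullable(value).count();
    }

    // Comparable to: for (int i = 0; i < 10; i++)
    static List<Integer> zeroToNine() {
        return Stream.iterate(0, i -> i < 10, i -> i + 1)
                     .collect(Collectors.toList());
    }
}
```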
