How Fast Are Streams Really?

A Realistic Look at Performance and When It Matters

Java Streams have significantly impacted how we process data since their release in 2014. The fluent and declarative API offers undeniable advantages in readability, conciseness, and safety, especially for complex transformations or large datasets.

But what about the bread-and-butter tasks?


Filtering, mapping, reducing, grouping… Streams provide elegant solutions where traditional loops are usually quite verbose, harder to follow, or more complicated to implement safely.

We don’t always need complex transformations on humongous datasets; we often iterate over small collections simply to find a specific element or perform a basic transformation.

In such scenarios, where the more sophisticated Stream features aren’t necessarily needed, some fundamental questions arise:

What is the real-world performance trade-off when choosing between Streams and traditional loops?

Does the elegance of Streams come at a performance cost we need to worry about?

Are Streams fast enough?

We’ll examine Java Streams versus traditional loops using JMH benchmarks across different dataset sizes and operations. This data-driven comparison will show when Stream overhead impacts performance and when it becomes negligible.

Understanding Streams: Ballet of a Behemoth

Streams have a certain elegance, representing complex transformations as pipelines. Features like lambdas and method references introduced a completely new way of approaching data processing right there in the JDK. They are one of my favorite features, and they can do great things more sensibly and concisely than before.

But let’s be real: Streams aren’t magic.

They are sophisticated abstractions built upon concepts like Spliterator and lazy, vertical pipeline evaluation, and they use many of the features introduced since Java 8.

Compared to other data processing approaches, like LINQ in .NET, they’re not as deeply integrated into the language and have no special keywords or syntax. That doesn’t mean they’re a simple library-level feature, either.

The JVM does its best to optimize many of the aspects Streams rely on. There are things like escape analysis, inlining, or even detecting specific patterns that the JIT compiler knows how to optimize, but the abstraction itself isn’t free.

No matter where we stand on Streams, it makes sense to start from how they are built and work, at least at a higher level, to judge and better understand potential overhead.

Getting Started: Stream Creation

Each Stream starts with a source, typically via a Spliterator.

The simplest way is calling a Collection#stream() variant:

```java
List<String> names = List.of("John", "Jane", "Fred", "Wilma");

Stream<String> stream = names.stream();
```

There are many different options to create streams, but let’s take a closer look at stream().

Under the hood, stream() usually calls spliterator() on the collection and then passes that to StreamSupport.stream(...).

This involves creating at least a Spliterator object and a Stream head object (often a ReferencePipeline.Head).

While highly optimized, especially for common collection types with specialized Spliterators, this initial setup has a non-zero cost compared to simply initializing a loop counter.
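To make that concrete, here’s a small sketch that reproduces by hand what the JDK’s default Collection#stream() implementation does: it asks the collection for its Spliterator and wraps it in a sequential pipeline head via StreamSupport.stream(spliterator, false). Individual collection classes are free to override stream(), so treat this as an illustration of the default path rather than a guarantee for every type. The class and method names are made up for this example.

```java
import java.util.List;
import java.util.stream.Stream;
import java.util.stream.StreamSupport;

public class StreamCreation {

    // Mirrors the default Collection#stream(): obtain the collection's Spliterator
    // and wrap it in a sequential (parallel = false) pipeline head.
    static <T> Stream<T> manualStream(List<T> source) {
        return StreamSupport.stream(source.spliterator(), false);
    }

    public static void main(String[] args) {
        List<String> names = List.of("John", "Jane", "Fred", "Wilma");

        List<String> viaStream = names.stream().toList();
        List<String> viaSupport = manualStream(names).toList();

        System.out.println(viaStream.equals(viaSupport));     // true
        System.out.println(manualStream(names).isParallel()); // false
    }
}
```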

Building a Pipeline

Each intermediate operation like map or filter we call on the Stream adds a new stage to the pipeline.

A new ReferencePipeline.StatelessOp or ReferencePipeline.StatefulOp (both of which implement Stream) gets created and linked to the previous stage.

There are more details to it, especially with different Stream variants and operations.

While the JVM is adept at optimizing any lambdas involved, the pipeline structure itself involves object creation and method calls that aren’t present in a simple loop with a few if statements.
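A quick way to see that intermediate operations really create and link new stage objects is to compare references and to try reusing a stage that already has a successor. In this sketch (class and method names are made up for illustration), we rely on Streams being single-use: the JDK’s implementation throws an IllegalStateException when an already linked stage gets a second operation.

```java
import java.util.List;
import java.util.stream.Stream;

public class PipelineStages {

    // Each intermediate operation returns a new stage object linked to its predecessor.
    static boolean createsNewStage() {
        Stream<String> head = List.of("a", "bb", "ccc").stream();
        Stream<String> filtered = head.filter(s -> s.length() > 1);
        return head != filtered;
    }

    // Once a stage has been linked to a successor, it can't be reused:
    // the JDK rejects a second operation on it with an IllegalStateException.
    static boolean reuseIsRejected() {
        Stream<String> head = List.of("a", "bb", "ccc").stream();
        head.map(String::length); // links a new stage to head (never consumed here)
        try {
            head.map(String::toUpperCase);
            return false;
        } catch (IllegalStateException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(createsNewStage()); // true
        System.out.println(reuseIsRejected()); // true
    }
}
```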

Generics Vs Primitives

Autoboxing, the conversion between primitives (like int) and their wrapper types (like Integer), carries a performance cost regardless of the iteration method used.

Converting between primitives and their Object wrapper types isn’t free at all, even if it’s hidden quite well in Java.

Primitive Stream variants like IntStream, LongStream, and DoubleStream are designed to avoid this overhead by operating directly on primitives.
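The difference is easiest to see side by side. In this sketch (names are illustrative), the Stream<Integer> version works on Integer objects throughout, the mapToInt version switches to an IntStream after a single unboxing step per element, and an int[] source avoids boxing entirely. All three compute the same sum of squares; only the representation differs.

```java
import java.util.List;
import java.util.stream.IntStream;

public class BoxedVsPrimitive {

    // Stream<Integer>: the lambda unboxes each element for the arithmetic,
    // and every intermediate result is boxed into an Integer again.
    static int sumOfSquaresBoxed(List<Integer> values) {
        return values.stream()
                .map(v -> v * v)          // unbox, multiply, box
                .reduce(0, Integer::sum); // unbox, add, box the partial result
    }

    // IntStream: after the single mapToInt step, everything stays an int.
    static int sumOfSquaresPrimitive(List<Integer> values) {
        return values.stream()
                .mapToInt(Integer::intValue) // unbox once per element
                .map(v -> v * v)
                .sum();
    }

    // Starting from an int[] avoids boxing entirely.
    static int sumOfSquares(int[] values) {
        return IntStream.of(values).map(v -> v * v).sum();
    }

    public static void main(String[] args) {
        List<Integer> boxed = List.of(1, 2, 3, 4);
        System.out.println(sumOfSquaresBoxed(boxed));             // 30
        System.out.println(sumOfSquaresPrimitive(boxed));         // 30
        System.out.println(sumOfSquares(new int[] {1, 2, 3, 4})); // 30
    }
}
```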

We’ll see later how this plays out in benchmarks.

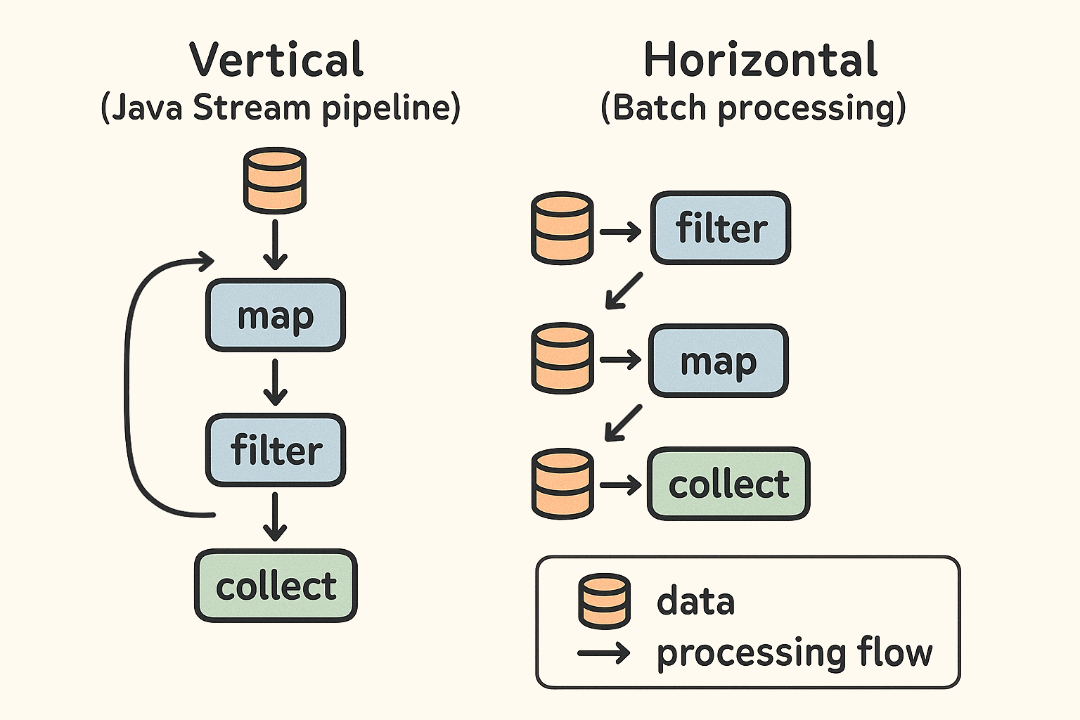

Laziness and Vertical Processing

Streams are lazy.

Data flows vertically through the pipeline, meaning that each element traverses the whole pipeline (or until it’s filtered out) before the next element starts its journey.

As before, it’s a little bit more complicated, especially in parallel scenarios, but this simplification is sufficient for illustration purposes.

This laziness and verticality is often a performance advantage. If a pipeline transforms 10,000 elements, filters them, and finally takes the first match, we don’t need to transform all 10,000 elements up front: only the elements actually required by the pipeline’s operations and their order are processed.

However, this model, where the terminal operation triggers processing and each element is handed from stage to stage through a chain of internal Sink objects, inherently involves more complex control flow and state management than a for loop.
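The following sketch makes the lazy, vertical processing visible by logging each filter and map invocation of a sequential stream. The side-effecting lambdas are for illustration only and aren’t good practice in real pipelines. For the input [1, 3, 4, 5, 6], nothing happens until findFirst() is called, each element passes through filter before the next one is touched, and elements 5 and 6 are never processed at all.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.stream.Stream;

public class VerticalProcessing {

    // Logs every filter/map invocation to make the processing order visible.
    static List<String> trace(List<Integer> source) {
        List<String> log = new ArrayList<>();

        Stream<Integer> pipeline = source.stream()
                .filter(v -> {
                    log.add("filter " + v);
                    return v % 2 == 0;
                })
                .map(v -> {
                    log.add("map " + v);
                    return v * 10;
                });

        // Building the pipeline hasn't touched a single element yet.
        log.add("pipeline built");

        // The terminal operation starts processing; findFirst() stops after the first match.
        Optional<Integer> first = pipeline.findFirst();
        log.add("result " + first.orElseThrow());
        return log;
    }

    public static void main(String[] args) {
        trace(List.of(1, 3, 4, 5, 6)).forEach(System.out::println);
        // pipeline built
        // filter 1
        // filter 3
        // filter 4
        // map 4
        // result 40
    }
}
```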

The Concept of Cost Amortization

So far, it doesn’t look good performance-wise for Streams… a lot of moving parts, pipeline setup with multiple method calls, complex Spliterators, additional Object creation, etc.

Still, we must acknowledge that much of the overhead (pipeline setup, initial object creation) is a fixed cost or scales with the complexity of the pipeline, not directly with the size of the processed dataset.

When processing large datasets with thousands of elements or more, this fixed cost is amortized quickly, as it’s spread across so many elements. The actual work done per element starts to dominate the execution time, and the initial setup becomes negligible.
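As a back-of-the-envelope model (a simplification, not something measured here), we can describe each approach by a fixed setup cost F and a per-element cost c. These symbols are introduced only for this illustration:

```latex
\begin{aligned}
T_{\text{loop}}(n)   &= F_{\text{loop}} + n\,c_{\text{loop}} \\
T_{\text{stream}}(n) &= F_{\text{stream}} + n\,c_{\text{stream}} \\
\frac{T_{\text{stream}}(n)}{T_{\text{loop}}(n)}
  &= \frac{F_{\text{stream}} + n\,c_{\text{stream}}}{F_{\text{loop}} + n\,c_{\text{loop}}}
  \;\xrightarrow{\;n \to \infty\;}\; \frac{c_{\text{stream}}}{c_{\text{loop}}}
\end{aligned}
```

If both approaches end up with similar per-element costs, the ratio tends toward 1 as n grows, and the difference between the fixed costs only matters while n is small.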

It’s not about labeling Streams “slow” for small datasets, but recognizing that the powerful abstraction doesn’t come for free.

But what is the actual cost? Let’s measure.

Getting Reliable Numbers

Many people, including myself (in the past), measure performance with a simple test case: take System.nanoTime() before and after the code in question and log the difference.

Seems simple enough, and the numbers appear to make sense in many cases.

However, this is a dangerously unreliable approach when dealing with modern Java performance.

Measuring the performance of code running on the JVM is notoriously tricky, especially for “microbenchmarks” of quickly executing code in an application with a very short lifecycle. The real power of the JVM lies in its highly dynamic runtime environment and its sophisticated runtime optimizations, thanks to multi-tiered JIT compilation and deoptimization.

That’s why the naive timing of methods is an inadequate approach to getting hard numbers.
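For contrast, here’s roughly what such naive timing looks like, as a sketch of the anti-pattern rather than something to copy. The helper naiveNanos is hypothetical. The printed numbers vary between runs and machines, which is exactly the problem, so no expected output is given.

```java
import java.util.ArrayList;
import java.util.List;

public class NaiveTiming {

    // The naive approach, shown as an anti-pattern, not a recommendation:
    // - no warmup: early runs measure the interpreter and JIT compilation, not optimized code
    // - single samples: no repetition, statistics, or error margin
    // - no isolation: previous runs, GC, and other code in the same JVM influence the numbers
    static long naiveNanos(Runnable task) {
        long start = System.nanoTime();
        task.run();
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        List<Integer> data = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            data.add(i);
        }

        for (int run = 1; run <= 5; run++) {
            // The computed sum is discarded, which is exactly the kind of
            // dead code the JIT compiler is allowed to optimize away.
            long nanos = naiveNanos(() -> data.stream().mapToInt(Integer::intValue).sum());
            System.out.println("run " + run + ": " + nanos + " ns");
        }
    }
}
```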

Enter JMH

JMH (Java Microbenchmark Harness) is the de facto standard for correctly building, running, and analyzing Java microbenchmarks.

Developed by JVM engineers, it tackles pitfalls like:

- Controlled Execution: Isolated JVM processes for clean runs that don’t affect each other.

- Preheating the Oven: Runs the code before measuring it, giving the JIT a chance to kick in beforehand.

- Measure Twice, Cut Once: Runs multiple iterations and analyzes the results statistically.

- It’s Dead, Jim: A Blackhole instance ensures the tested code isn’t optimized away entirely.

- State of Affairs: Setting up the benchmark data doesn’t interfere with the measured code execution.

Let’s Take an (Educated) Guess First

One crucial purpose of benchmarking is to confirm (or refute) our general assumptions about code and constructs, right? Especially for things like “best practices,” which we often accept without any actual basis for our beliefs.

Well, calling my blog “belief-driven design” wasn’t an accident.

We developers cargo-cult our way through our code bases way too often. Maybe with the best intentions, maybe because of a knowledge gap regarding alternative ideas and approaches, or just out of simple navel-gazing.

The more we learn about a technology and the more experience we gain, the easier it gets to make an educated guess about many things. But it’s also easier to get stuck in ways of thinking. It still remains a guess until actually verified.

That’s why we need to get our expectations shattered sometimes: to stop making assumptions without a concrete basis and to broaden our horizons to new approaches.

My personal guess was that Streams are significantly slower for small datasets, which even led me to write a leaner alternative for lazily and vertically processing Collection-based types before I benchmarked my assumptions.

So let’s shatter some assumptions and speculation and replace them with hard facts!

Benchmark Setup

First, we need to set up JMH; in my case, with Gradle.

I prefer having it in its own source set under src/jmh/java to keep it separated from tests and actual code.

Here are the relevant snippets from build.gradle:

```groovy
sourceSets {
    jmh {
        java.srcDirs = ['src/jmh/java']
        resources.srcDirs = ['src/jmh/resources']

        // Optional: Extend classpath if benchmarks need main/test code
        // compileClasspath += sourceSets.main.output + configurations.runtimeClasspath
        // runtimeClasspath += sourceSets.main.output + configurations.runtimeClasspath
    }
}

dependencies {
    jmhImplementation 'org.openjdk.jmh:jmh-core:1.37'
    jmhAnnotationProcessor 'org.openjdk.jmh:jmh-generator-annprocess:1.37'
}

tasks.register('jmh', JavaExec) {
    group = 'Benchmark'
    description = 'Run JMH benchmarks in the jmh source set.'
    classpath = sourceSets.jmh.runtimeClasspath
    mainClass = 'org.openjdk.jmh.Main'

    // Optional: Add JMH CLI arguments here
    args = []
}
```

That’s all it takes to run any benchmarks under src/jmh/java with a simple ./gradlew jmh.

Now that we have everything up and running, it’s time to create some benchmarks.

Choosing a Battleground

To make meaningful comparisons, we need concrete scenarios:

Datasets (# of elements)

- Tiniest: 1

- Tiny: 2

- Small: 20

- Medium: 500

- Large: 10'000

Processing challenges:

- Simple: Filter/Map/Reduce (like a summation)

- Complex: Filter/Map/Stateful Ops/Reduce

Benchmark Scaffold

Similar to the usual unit testing frameworks, benchmarks are controlled by a myriad of annotations.

Take, for example, this empty scaffold for testing the previously chosen dataset sizes in the form of a List<UUID>:

```java
package benchmarks.streams;

import java.util.*;
import java.util.concurrent.TimeUnit;

import org.openjdk.jmh.annotations.*;
import org.openjdk.jmh.infra.Blackhole;

@State(Scope.Benchmark)
@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.NANOSECONDS)
@Warmup(iterations = 3, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 3, time = 1, timeUnit = TimeUnit.SECONDS)
public class MyLittleBenchmark {

    @Param({ "1", "2", "20", "500", "10000" })
    private int size;

    private List<UUID> data;

    @Setup(Level.Trial)
    public void setup() {
        // Fixed seed for reproducibility
        Random random = new Random(12345);
        this.data = new ArrayList<>(this.size);
        for (int i = 0; i < this.size; i++) {
            this.data.add(new UUID(random.nextLong(), random.nextLong()));
        }
    }

    // Benchmark methods go here...
}
```

Here’s an explanation of the different annotations:

- @State(Scope.Benchmark): Declares that the class holds state (data) for the actual benchmark.

- @BenchmarkMode(Mode.AverageTime): Tells JMH to measure the average execution time. There’s also Throughput, SampleTime, and SingleShotTime available.

- @OutputTimeUnit(TimeUnit.NANOSECONDS): Sets the time unit used in the output.

- @Warmup(...): Before actual measurements begin, JMH runs the benchmark with the provided arguments to give the JVM a chance to optimize/JIT compile the code. This creates a more realistic runtime environment, but also prolongs the overall benchmark duration.

- @Measurement(...): After getting the JVM warm and ready, JMH runs the benchmark as configured here. In this case, each benchmark method runs 3 measurement iterations of ~1 second each to get reliable, statistically sound results. Combined with JMH’s default of 5 forks, this yields the 15 samples shown in the Cnt column of the results.

- @Param(...): A parameter used for running the benchmarks. JMH runs the entire benchmark (warmup and measurement) once for each value.

- @Setup(Level.Trial): Marks a method for setting up the benchmark. With Level.Trial, it runs before each trial, meaning before all warmup and measurement iterations for a specific parameter value.

In short, this JMH setup is to repeatedly measure how long the benchmark methods take to run on different datasets.

It’s time to write an actual benchmark!

Creating Some Benchmarks

Let’s implement a simple task: filter the UUID instances based on hashCode() and extract part of their string representation.

It’s kind of nonsense, but it represents dealing with a data type by filtering and transforming it:

```java
@Benchmark
public void forLoop(Blackhole b) {
    List<String> result = new ArrayList<>();

    for (var uuid : this.data) {
        // FILTER
        if (uuid.hashCode() % 7 == 0) {
            continue;
        }

        // TRANSFORMATION (3x)
        String uuidStr = uuid.toString();
        String[] parts = uuidStr.split("-");
        String thirdPart = parts[2];

        // GATHER RESULTS
        result.add(thirdPart);
    }

    // Prevent dead code elimination
    b.consume(result);
}
```

Like a unit test marked with @Test, the @Benchmark annotation marks a benchmark method, which must be public.

A Stream version would look like this:

```java
@Benchmark
public void stream(Blackhole b) {
    List<String> result =
        this.data.stream()
                 // FILTER
                 .filter(uuid -> uuid.hashCode() % 7 != 0)
                 // TRANSFORMATION (3x)
                 .map(Object::toString)
                 .map(str -> str.split("-"))
                 .map(parts -> parts[2])
                 // GATHER RESULTS
                 .toList();

    b.consume(result);
}
```

It’s time to run the two benchmarks with ./gradlew jmh.
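Before comparing timings, it’s worth making sure both variants actually compute the same thing. The following plain-Java sketch (no JMH involved; class and method names are illustrative) reuses the benchmarks’ filter and transformation logic and the seeded data generation from setup(), then compares the results for each dataset size:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.UUID;

public class UuidEquivalence {

    // Same logic as the forLoop benchmark, without JMH.
    static List<String> withLoop(List<UUID> data) {
        List<String> result = new ArrayList<>();
        for (var uuid : data) {
            if (uuid.hashCode() % 7 == 0) {
                continue;
            }
            result.add(uuid.toString().split("-")[2]);
        }
        return result;
    }

    // Same logic as the stream benchmark, without JMH.
    static List<String> withStream(List<UUID> data) {
        return data.stream()
                .filter(uuid -> uuid.hashCode() % 7 != 0)
                .map(Object::toString)
                .map(str -> str.split("-"))
                .map(parts -> parts[2])
                .toList();
    }

    // Same seeded data generation as the benchmark's setup() method.
    static List<UUID> generate(int size) {
        Random random = new Random(12345);
        List<UUID> data = new ArrayList<>(size);
        for (int i = 0; i < size; i++) {
            data.add(new UUID(random.nextLong(), random.nextLong()));
        }
        return data;
    }

    public static void main(String[] args) {
        for (int size : new int[] {1, 2, 20, 500, 10_000}) {
            List<UUID> data = generate(size);
            System.out.println(size + ": " + withLoop(data).equals(withStream(data)));
        }
    }
}
```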

Interpreting Results

After some time, I got the following results on my machine running Temurin 23.0.2 on Ubuntu 24.04.2 (6.11.0-24) with a Ryzen 5 7600X and 64 GB RAM:

I’ve reordered, cleaned up, and commented the entries to make them easier to compare. However, make sure to read the upcoming note about error margins to better understand how to interpret the numbers.

```text
Benchmark  (size)  Mode  Cnt      Score         Error  Units
forLoop         1  avgt   15       68.6 ±         0.8  ns/op
stream          1  avgt   15      125.6 ±         1.8  ns/op  // ~1.8x
forLoop         2  avgt   15      131.6 ±         0.8  ns/op
stream          2  avgt   15      188.5 ±         8.8  ns/op  // ~1.4x
forLoop        20  avgt   15    1'194.8 ±         9.9  ns/op
stream         20  avgt   15    1'299.3 ±        14.5  ns/op  // ~1.1x
forLoop       500  avgt   15   31'076.3 ±       342.3  ns/op
stream        500  avgt   15   31'030.7 ±       286.7  ns/op  // ~1.0x
forLoop    10'000  avgt   15  588'531.9 ±    14'567.4  ns/op
stream     10'000  avgt   15  630'282.9 ±     8'089.5  ns/op  // ~1.1x
```

As expected, there’s a gap, but it’s closing quickly.

For small input sizes, a traditional for loop is measurably faster than an equivalent Stream implementation and wins on raw power.

This is primarily due to the inherent overhead of setting up and executing the Stream pipeline.

However, the performance gap between the loop and the Stream narrows as the input size increases.

This suggests that the overhead of the Stream setup is relatively fixed, while the per-element processing cost is similar for both approaches.

The error margins are relatively small compared to the scores, indicating consistent measurements.

Understanding Error Margins

“± 0.8” represents an error margin (or confidence interval). In JMH, this typically indicates a 99.9% confidence that the true average falls within this range. A small error margin relative to the score suggests consistent, reliable measurements, while a large one indicates volatile performance between the runs.

When comparing benchmark results, overlapping error margins mean, as a rule of thumb, that we can’t claim a statistically significant difference between approaches. Only when error margins don’t overlap can we confidently declare one approach faster than another.
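The overlap rule is simple enough to express in a few lines. This sketch (the Score record and overlaps method are hypothetical helpers, not part of JMH) treats the reported error as the half-width of the interval and checks two pairs of rows from the UUID results:

```java
public class ErrorMargins {

    // A JMH score with its reported error (half-width of the confidence interval).
    record Score(double value, double error) {
        double lower() { return value - error; }
        double upper() { return value + error; }
    }

    // Rule of thumb: only non-overlapping intervals let us call a winner.
    static boolean overlaps(Score a, Score b) {
        return a.lower() <= b.upper() && b.lower() <= a.upper();
    }

    public static void main(String[] args) {
        // Values taken from the UUID results (ns/op).
        Score loop500 = new Score(31_076.3, 342.3);
        Score stream500 = new Score(31_030.7, 286.7);
        Score loop10k = new Score(588_531.9, 14_567.4);
        Score stream10k = new Score(630_282.9, 8_089.5);

        System.out.println(overlaps(loop500, stream500)); // true  -> statistical tie
        System.out.println(overlaps(loop10k, stream10k)); // false -> the loop is faster
    }
}
```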

For a single element, the for loop is clearly faster, and both error margins are small relative to their scores.

Still, extremely short-running benchmarks deserve some caution, as external factors like JIT instability and OS jitter affect their results more than those of longer-running ones.

For two elements, the loop clearly wins again, even though the Stream’s error margin is noticeably larger (± 8.8 ns/op).

At 20 elements, the gap shrinks to below 10%, but the loop keeps a measurable edge.

At 500 elements, the Stream is faster for the first time, although the overlapping error margins make it a statistical tie instead of a win.

The most interesting result is at 10,000 elements, where the Stream is slower again, this time with a smaller error margin than the loop.

So what does that mean for us choosing between the two approaches?

Drawing Conclusions

Based on these specific results for this specific benchmark, it seems that up to 20 elements, the for loop is the clear winner on raw performance.

The more elements we process, the smaller the gap gets. This is consistent with typical Stream overhead, which is amortized over more elements.

However, something funny happens at 10,000 elements: the Stream falls clearly behind the loop again.

This performance degradation is most likely due to allocating more intermediate objects and the accompanying garbage collector pressure.

Also, while the JIT often minimizes it, method call overhead accumulates with more elements flowing through the Stream.

Maybe we can improve the results by improving the benchmarked code?

Improving the Benchmark

Can we make the Stream faster by optimizing allocation patterns and method calls?

Allocation patterns are relevant to Streams because they may create additional intermediate objects, for example, when passing elements through pipeline stages.

At 10,000 elements, even minor differences in allocations can lead to garbage collection pressure, which gives the for loop an edge.

Method call overhead, on the other hand, can be reduced by using fewer intermediate operations.

Let’s reduce the number of pipeline stages each element passes through by combining the three map operations into one:

```java
@Benchmark
public void streamImproved(Blackhole b) {
    List<String> result =
        this.data.stream()
                 .filter(uuid -> uuid.hashCode() % 7 != 0)
                 // COMBINE TRANSFORMATION OPS
                 .map(uuid -> uuid.toString().split("-")[2])
                 .toList();

    b.consume(result);
}
```

And here are the results:

```text
Benchmark        (size)  Mode  Cnt      Score         Error  Units
forLoop          10'000  avgt   15  588'531.9 ±    14'567.4  ns/op
stream           10'000  avgt   15  630'282.9 ±     8'089.5  ns/op  // ~1.1x
streamImproved   10'000  avgt   15  575'398.2 ±     9'032.4  ns/op  // ~0.98x
```

The improved variant is clearly faster than the original Stream variant; their error margins don’t overlap. Compared to the for loop, however, the error margins do overlap.

So there’s no clear winner between the loop and the improved Stream. However, allocating many String instances is costly in either approach, so how about testing Streams with another data type?

Let’s Try Primitives

Let’s try again with another data type, List<Integer>, but also int[] to see the overhead of autoboxing:

```java
package blog;

import java.util.*;
import java.util.concurrent.TimeUnit;

import org.openjdk.jmh.annotations.*;
import org.openjdk.jmh.infra.Blackhole;

@State(Scope.Benchmark)
@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.NANOSECONDS)
@Warmup(iterations = 3, time = 1, timeUnit = TimeUnit.SECONDS)
@Measurement(iterations = 3, time = 1, timeUnit = TimeUnit.SECONDS)
public class MyLittleIntBenchmark {

    @Param({ "1", "2", "20", "500", "10000" })
    private int size;

    private List<Integer> data;

    private int[] primitiveData;

    @Setup(Level.Trial)
    public void setup() {
        // Fixed seed for reproducibility
        this.data = new Random(12345).ints().limit(this.size).boxed().toList();
        this.primitiveData = this.data.stream().mapToInt(Integer::intValue).toArray();
    }

    @Benchmark
    public void forLoopPrimitives(Blackhole b) {
        List<Integer> result = new ArrayList<>();
        for (var val : this.primitiveData) {
            // FILTER
            if (val % 7 == 0) {
                continue;
            }
            // TRANSFORM
            val = val * 13;
            // FILTER
            if (val % 42 == 0) {
                continue;
            }
            // TRANSFORM
            val /= 23;
            // GATHER RESULTS (boxes each int into the List<Integer>)
            result.add(val);
        }
        b.consume(result);
    }

    @Benchmark
    public void forLoopBoxed(Blackhole b) {
        List<Integer> result = new ArrayList<>();
        for (var val : this.data) {
            // FILTER
            if (val % 7 == 0) {
                continue;
            }
            // TRANSFORM
            val = val * 13;
            // FILTER
            if (val % 42 == 0) {
                continue;
            }
            // TRANSFORM
            val /= 23;
            // GATHER RESULTS
            result.add(val);
        }
        b.consume(result);
    }

    @Benchmark
    public void streamBoxed(Blackhole b) {
        List<Integer> result =
            this.data.stream()
                     // FILTER
                     .filter(val -> val % 7 != 0)
                     // TRANSFORM
                     .map(val -> val * 13)
                     // FILTER
                     .filter(val -> val % 42 != 0)
                     // TRANSFORM
                     .map(val -> val / 23)
                     // GATHER RESULTS
                     .toList();
        b.consume(result);
    }

    @Benchmark
    public void streamPrimitive(Blackhole b) {
        int[] result =
            Arrays.stream(this.primitiveData)
                  // FILTER
                  .filter(val -> val % 7 != 0)
                  // TRANSFORM
                  .map(val -> val * 13)
                  // FILTER
                  .filter(val -> val % 42 != 0)
                  // TRANSFORM
                  .map(val -> val / 23)
                  // GATHER RESULTS
                  .toArray();
        b.consume(result);
    }
}
```

This time, the hopefully improved version is already included.

The “improvement” is using an IntStream instead of a boxed Stream<Integer> and collecting the results into an int[] via toArray(), so this Stream variant never boxes at all. Note that the primitive for loop still boxes each result when adding it to its List<Integer>.

Finally, the results better match the assumption that more elements and the right data type benefit Streams:

```text
Benchmark          (size)  Mode  Cnt     Score        Error  Units
forLoopBoxed            1  avgt   15       6.6 ±        0.1  ns/op
streamBoxed             1  avgt   15      48.5 ±        0.8  ns/op  // ~7.3x
forLoopPrimitives       1  avgt   15       6.9 ±        0.1  ns/op
streamPrimitive         1  avgt   15      41.3 ±        0.3  ns/op  // ~6.0x
forLoopBoxed            2  avgt   15      10.6 ±        0.2  ns/op
streamBoxed             2  avgt   15      55.2 ±        3.3  ns/op  // ~5.2x
forLoopPrimitives       2  avgt   15      10.2 ±        0.1  ns/op
streamPrimitive         2  avgt   15      43.9 ±        0.3  ns/op  // ~4.3x
forLoopBoxed           20  avgt   15      71.6 ±        5.0  ns/op
streamBoxed            20  avgt   15     141.3 ±        1.6  ns/op  // ~2.0x
forLoopPrimitives      20  avgt   15      65.3 ±        0.8  ns/op
streamPrimitive        20  avgt   15     104.4 ±        0.7  ns/op  // ~1.6x
forLoopBoxed          500  avgt   15   2'371.4 ±       39.4  ns/op
streamBoxed           500  avgt   15   3'196.8 ±       25.5  ns/op  // ~1.3x
forLoopPrimitives     500  avgt   15   1'977.1 ±       10.8  ns/op
streamPrimitive       500  avgt   15   1'915.7 ±       12.6  ns/op  // ~0.97x
forLoopBoxed       10'000  avgt   15  40'487.0 ±    1'713.1  ns/op
streamBoxed        10'000  avgt   15  68'126.6 ±    1'250.6  ns/op  // ~1.7x
forLoopPrimitives  10'000  avgt   15  34'730.2 ±    2'168.6  ns/op
streamPrimitive    10'000  avgt   15  43'282.1 ±      660.8  ns/op  // ~1.2x
```

Interpreting Results (again)

The results give us a fascinating and perhaps more intuitive picture of the performance trade-offs between loops and Streams.

One obvious thing, though, is how clearly primitives beat boxed processing once there are more than just a few elements, regardless of the approach, with the biggest gap between the two Stream variants.

At small data sizes (1-2 elements), for loops outperform Streams by roughly 4-7x due to pipeline setup overhead.

With 20 elements, for loops are still 1.6-2.0x faster, though the gap narrows. Primitive implementations begin showing advantages over boxed versions.

At 500 elements, IntStream performance matches the primitive for loop and even edges it out slightly (1'915.7 vs. 1'977.1 ns/op).

This suggests that the pipeline setup costs have been amortized.

Both boxed implementations lag significantly behind their primitive counterparts.

Interestingly, when scaling up to 10'000 elements, the primitive for loop regains its lead, with IntStream taking ~25% longer, similar to what we saw with the UUID benchmark.

IntStream still comfortably beats the much slower boxed Stream, but at this size, it even falls slightly behind the boxed for loop.

This suggests that for very simple operations repeated many times, the minimal overhead associated with the Stream’s internal dispatch or potential differences in cache utilization might become slightly more noticeable compared to the extremely tight structure of a basic for loop.

This is a good reminder that intuitions about performance often need validation through actual measurement.

What About Parallel Streams?

Just to have the full picture, we should check out a parallel variant, too.

As the UUID example performed worse with 10'000 elements, it’s a better candidate:

```java
@Benchmark
public void streamParallel(Blackhole b) {
    List<String> results =
        this.data.parallelStream()
                 .filter(uuid -> uuid.hashCode() % 7 != 0)
                 .map(uuid -> uuid.toString().split("-")[2])
                 .toList();

    b.consume(results);
}
```

The results are as expected, clearly favoring parallelism:

```text
Benchmark        (size)  Mode  Cnt      Score         Error  Units
forLoop          10'000  avgt   15  588'531.9 ±    14'567.4  ns/op
stream           10'000  avgt   15  630'282.9 ±     8'089.5  ns/op  // ~1.1x
streamImproved   10'000  avgt   15  575'398.2 ±     9'032.4  ns/op  // ~0.98x
streamParallel   10'000  avgt   15  145'725.9 ±       733.4  ns/op  // ~0.25x
```

The improved Stream variant was already close to the for loop, but the parallel variant blows them both out of the water, being ~4 times faster!

To be fair, the overall task works well in parallel with a large number of elements, as the filter step comes first and the map operation is a pure function, too.

But remember: Parallelism isn’t free, either!

For very small datasets or tasks with high coordination overhead, it’s usually slower. In our case, with 20 elements, the parallel approach was still almost 3 times slower than the improved variant.

Another good reason to benchmark our code.

Beyond Chasing Nanoseconds

We’ve seen a lot of benchmark results, but what should we learn from them?

Does a for loop always outperform a Stream?

Long story short: it depends on our end goal.

If we’re looking for raw processing performance, a well-crafted for loop will often beat a Stream.

Especially for a small number of elements, the overhead of setting up and executing the pipeline, usually negligible for complex tasks, quickly becomes significant when the work done per element is minimal.

With the cheaper integer operations, Streams mostly remained slower, even for larger datasets.

Does this mean we should abandon Streams and revert solely to imperative loops?

Absolutely not!

While the benchmark results highlight that Streams aren’t a silver bullet for all performance challenges, their overall value extends far beyond pure execution speed.

Here’s some crucial context about execution speed:

While a loop might save you tens or hundreds of nanoseconds per operation in these isolated tests, real-world applications operate on a vastly different timescale:

- A typical database query might take 1 to 50+ milliseconds… that’s 1'000'000 to 50'000'000 nanoseconds!

- A network API call could easily take 50 to 500+ milliseconds.

- …

Optimizing a loop to save 500 nanoseconds when the surrounding operation takes 50 milliseconds (50'000'000 ns) represents a performance gain of a whopping 0.001%.

Chasing nanoseconds is a fun pastime, and I’m guilty of doing it, too. But such micro-optimizations are frequently irrelevant noise compared to addressing the real bottlenecks like inefficient queries, excessive I/O, or poor caching.

That’s why I often prefer Streams over loops, even if I know they might be “slower”. Streams give us the power to express complex data processing as declarative pipelines that read closer to the problem descriptions, which improves readability and reduces boilerplate compared to most loops. This approach typically results in more maintainable, concise code that clearly communicates intent while offering a simple gateway to parallel execution.

Converting the UUID example to process the data in parallel would’ve been way more complex than simply calling parallel() on the Stream.
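To put that into perspective, here’s a sketch of one hand-rolled alternative for the UUID task using an ExecutorService: split the list into chunks, process them on a thread pool, and merge the partial results in their original order. It’s only one possible approach, and the class and method names are made up for illustration. The parallel Stream produces the same result, including the encounter order, with a single method call:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.UUID;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ManualParallel {

    static String thirdPart(UUID uuid) {
        return uuid.toString().split("-")[2];
    }

    // The one-liner: splitting, scheduling, and order-preserving merging are handled for us.
    static List<String> withParallelStream(List<UUID> data) {
        return data.parallelStream()
                .filter(uuid -> uuid.hashCode() % 7 != 0)
                .map(ManualParallel::thirdPart)
                .toList();
    }

    // Hand-rolled: split into chunks, process each chunk on a pool, merge in order.
    static List<String> withExecutor(List<UUID> data, int chunks)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(chunks);
        try {
            int chunkSize = (data.size() + chunks - 1) / chunks; // ceiling division
            List<Future<List<String>>> futures = new ArrayList<>();
            for (int start = 0; start < data.size(); start += chunkSize) {
                List<UUID> chunk = data.subList(start, Math.min(start + chunkSize, data.size()));
                futures.add(pool.submit(() -> {
                    List<String> partial = new ArrayList<>();
                    for (UUID uuid : chunk) {
                        if (uuid.hashCode() % 7 != 0) {
                            partial.add(thirdPart(uuid));
                        }
                    }
                    return partial;
                }));
            }
            List<String> result = new ArrayList<>(data.size());
            for (Future<List<String>> future : futures) {
                result.addAll(future.get()); // waits for each chunk, keeps the original order
            }
            return result;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        Random random = new Random(12345);
        List<UUID> data = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            data.add(new UUID(random.nextLong(), random.nextLong()));
        }
        int threads = Runtime.getRuntime().availableProcessors();
        System.out.println(withExecutor(data, threads).equals(withParallelStream(data))); // true
    }
}
```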

That said, parallel Streams don’t automatically improve performance: as with sequential Streams, it depends on the type and order of operations.

However, the most critical lesson reinforced by our experiments is the absolute necessity of measurement. Generalizations and “gut feelings” about performance can be dangerous.

As we observed:

- Workload matters

- Data size matters

- Implementation details matter

- Execution environment matters

This doesn’t mean Stream performance never matters. When performance is critical for a specific code path, there is no substitute for benchmarking the actual code, with representative data, in the target environment.

Tools like JMH are invaluable for obtaining reliable results.

Trust the benchmark, not just the hunch.

Making The Right Decision: Balancing Performance and Productivity


So, how do we choose wisely? Here are some practical guidelines based on our findings and the broader context.

Consider Streams When

- Readability is paramount: The declarative style often wins for clarity, especially in complex multi-step transformations.

- Working with medium to large datasets: The overhead gets amortized as collection size grows. But be aware of allocation patterns!

- Parallelism is needed: parallelStream() makes parallel processing trivially accessible. However, there are still many pitfalls to maximizing parallel Stream performance.

- Code evolution is a concern: Stream pipelines often require less modification when requirements change.

Consider Loops When

- Maximum performance is critical: For tiny collections (under 20 elements) or in performance-critical hot paths, nothing beats a well-crafted loop. Such hot paths should be identified by profiling, though, not by your hunch.

- Processing primitives: If boxing/unboxing overhead must be absolutely minimized.

- Simple operations: When you’re just iterating once with minimal logic.

- State needs to be shared: While possible with Streams (but discouraged), managing shared mutable state is often simpler and clearer within a traditional loop. If possible, aim for immutability regardless of your approach.
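As an example of state that a loop handles naturally, consider collapsing runs of equal consecutive elements: the loop only needs to remember the previous element in a local variable. A Stream version would typically need either a lambda capturing mutable state, which breaks down in parallel, or a custom Collector. The class and method names here are illustrative:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

public class CarriedState {

    // Collapses runs of equal consecutive elements, e.g. [1, 1, 2, 1] -> [1, 2, 1].
    // The state carried between iterations is just two local variables.
    static <T> List<T> collapseRuns(List<T> input) {
        List<T> result = new ArrayList<>();
        T previous = null;
        boolean hasPrevious = false;
        for (T current : input) {
            if (!hasPrevious || !Objects.equals(previous, current)) {
                result.add(current);
            }
            previous = current;
            hasPrevious = true;
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(collapseRuns(List.of(1, 1, 2, 2, 2, 3, 1))); // [1, 2, 3, 1]
    }
}
```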

The Real Lesson: Measure What Matters

Streams offer tremendous advantages in modern Java development. Their performance is generally excellent and often statistically indistinguishable from loops for many common scenarios, especially once dataset sizes grow beyond a handful of elements.

However, loops can be faster, particularly with primitives or smaller datasets.

The key is to understand when this difference might occur and, more importantly, whether it matters in the context of our overall application performance.

Often, the clarity and maintainability gains from Streams justify their use, even if a loop might be marginally faster in a microbenchmark. But only through measurement can we truly understand the trade-offs and make the optimal decision.

Remember: premature optimization is the root of all evil.

Write clear, maintainable code first.

Focus on the bigger picture.

Then measure.

Optimize where it has a real impact.

That’s the real lesson behind all those nanoseconds we’ve been chasing way too often.