Taking Out the Trash in Java

An Overview of JVM Garbage Collection

Memory management is a critical aspect of any programming language. It ensures that applications efficiently use the available resources without leaking memory or crashing.

Traditionally, languages like C and C++ required manual memory allocation and deallocation, which can easily be done wrong, introducing potential risks like dangling pointers and double-free.

Java’s automatic memory management is one of its most notable and appealing features, immensely simplifying development. Instead of allocating and freeing memory manually, it’s managed by a process called garbage collection (GC). However, it’s crucial to understand how GC works under the hood, as it’s not free and can impact overall performance in various ways.

While the JVM executes bytecode, memory management happens behind the scenes. The JVM ensures that objects are created and disposed of properly without requiring us to deallocate them explicitly. The garbage collector (GC) is responsible for this: it identifies objects that are no longer used and frees their memory.

This article explores the concept of garbage collection, the different garbage collectors available in the JVM, how other languages manage their memory, and finally, some practical tips for tuning and debugging GC in Java.


Please note that I’m not an expert in GC tuning.

What you’re reading is my effort to dig deeper into the topic and broaden my knowledge, and share my results with you.

So, feel free to point out any inaccuracies or errors! And if you’re more interested in GC performance, I recommend the following article: Understanding JVM Garbage Collector Performance

Memory Management and Object Allocation in the JVM

The process of garbage collection describes the automatic reclamation of no longer used memory. Before diving right into how it works and the different implementations, it’s essential to understand more about how Java manages memory in general.

The JVM divides memory into several regions, with the following two being the most prominent: the heap and the stack.

Heap Memory: Where Objects Live

The heap is where all objects are stored when they are created using the new keyword.

It’s shared across all threads, making it the main area for dynamic memory allocation.

When a class instance is created, it resides on the heap until it is no longer reachable; at this point, it’s garbage collected. Reachability is defined by the accessibility of an object, either directly or indirectly, via references such as local variables, static fields, or method parameters.

There are scenarios where the JVM allocates objects on the stack instead of the heap.

However, these are rare, highly specific optimizations that depend on the JVM in use and its escape analysis capabilities.

As the stack isn’t managed by the garbage collector, these objects aren’t freed via GC but are automatically freed when the stack frame is destroyed.
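To make reachability a bit more tangible, here is a minimal sketch that watches an object through a WeakReference, which doesn’t keep its referent alive. The class name ReachabilityDemo and the helper isAlive are made up for illustration, and whether the object is actually gone after System.gc() depends on the JVM, as the call is only a request.

```java
import java.lang.ref.WeakReference;

class ReachabilityDemo {

    /** Returns true while the referent of the weak reference has not been collected. */
    static boolean isAlive(WeakReference<?> ref) {
        return ref.get() != null;
    }

    public static void main(String[] args) {
        Object strong = new Object();   // reachable via the local variable
        WeakReference<Object> weak = new WeakReference<>(strong);

        System.out.println("while referenced: alive = " + isAlive(weak));

        strong = null;   // drop the last strong reference; the object is now eligible for GC
        System.gc();     // only a request, the JVM may ignore it

        // May print true or false, depending on whether a collection actually ran.
        System.out.println("after dropping the reference: alive = " + isAlive(weak));
    }
}
```

The second line of output is intentionally not predictable: becoming unreachable only makes an object eligible for collection, it doesn’t say when the memory is reclaimed.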

The heap follows the generational hypothesis, which states:

- Most objects die young

- Few objects live long

- Young objects are most likely to reference other young ones

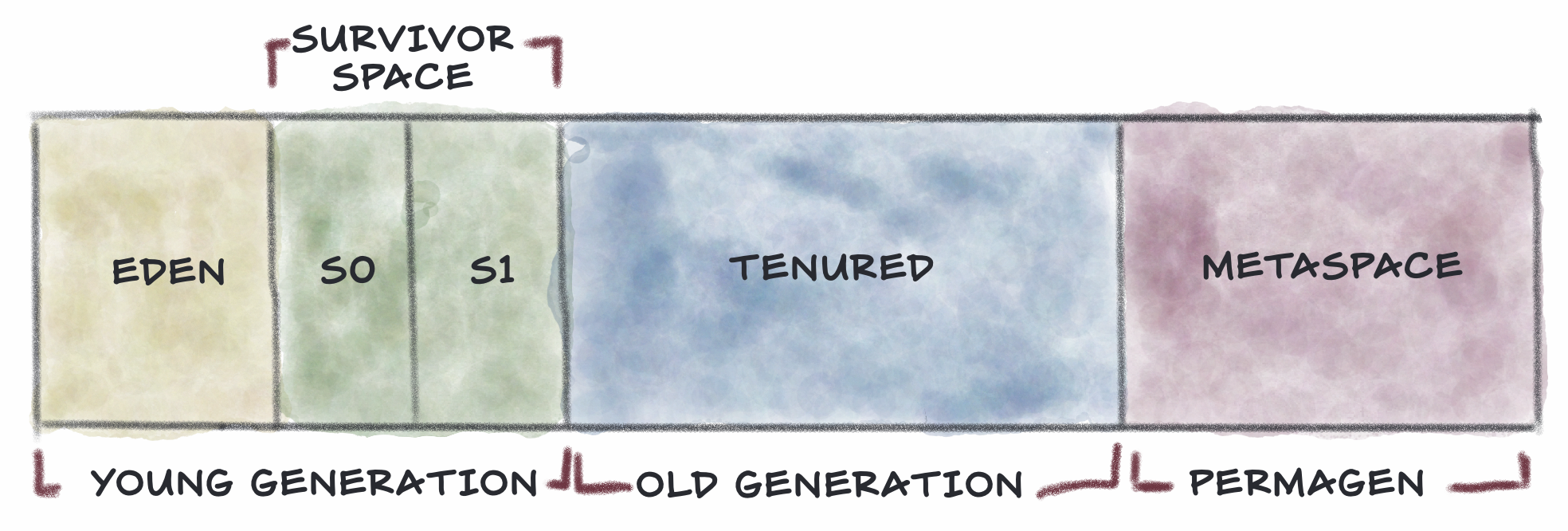

Based on these assumptions, the heap is divided into different regions.

The Young Generation is the region where most newly created objects are allocated initially. It is further subdivided into:

Eden Space

Most new objects are allocated here. This space is frequently cleared during minor garbage collection events.

Survivor Spaces (S0 and S1)

Objects that survive a minor garbage collection in the Eden space are moved here. Survivor spaces help segregate objects still in use from those that need garbage collection.

When the Eden Space runs into memory pressure—meaning it’s running low—a minor GC run is initiated. Young objects that are no longer reachable are marked to be collected.

Objects that are still reachable are moved to a Survivor Space, and their “age” increases, for evaluation in another cycle.

The Old Generation (Tenured) space contains long-lived objects that survived many rounds of minor GC cycles. Checking and reclaiming these objects is part of a major GC event, which usually takes longer and is more noticeable than a minor one.

Java 8 also introduced a special area known as Metaspace, replacing the Permanent Generation (PermGen) used in previous JVM versions. Here, the JVM stores metadata about classes and methods being executed. This change was made to address several limitations and improve memory management for class metadata.

Where PermGen was fixed in size on the heap, Metaspace can resize itself dynamically, as it’s a native memory region outside the heap.

The JVM automatically manages the size depending on the application’s needs, but it can be restricted with the flag -XX:MaxMetaspaceSize.

Metaspace is checked during major GC cycles, and is more efficient to GC than PermGen was.
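The regions described above can be inspected at runtime through the standard java.lang.management API. The following is a small sketch, with MemoryPools and poolNames being names I made up; the pool names it prints depend on the active garbage collector and JVM, so don’t rely on specific strings.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.util.List;
import java.util.stream.Collectors;

class MemoryPools {

    /** Names of all memory pools of the given type (HEAP or NON_HEAP). */
    static List<String> poolNames(MemoryType type) {
        return ManagementFactory.getMemoryPoolMXBeans().stream()
                .filter(pool -> pool.getType() == type)
                .map(MemoryPoolMXBean::getName)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Heap pools typically map to Eden, Survivor, and Old Generation.
        System.out.println("Heap pools:     " + poolNames(MemoryType.HEAP));
        // Non-heap pools usually include Metaspace on HotSpot-based JVMs.
        System.out.println("Non-heap pools: " + poolNames(MemoryType.NON_HEAP));
    }
}
```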

Stack Memory: Where Method Calls and Variables Live

While the heap is used for dynamic memory (objects), the stack is dedicated to managing method calls and their local execution contexts. Unlike the heap, each thread has its own stack.

The stack is small by design and predictable in size.

Conceptually, it’s a “tower” that follows the last-in, first-out (LIFO) principle, with each floor representing a stack frame.

The JVM automatically allocates a stack frame for each method call, containing:

Local variables and method parameters

Primitives live directly in the stack, while objects are referenced from the heap.

Operand stack

Certain bytecode instructions create intermediate results, and these are held here.

Return address

After completing the method, the JVM must know where to return next.

As each method call occurs, a new stack frame is pushed onto the thread’s call stack. After the method finishes, its stack frame is popped off, and the control returns to the calling method.

This is why stack memory is limited: each thread’s stack grows with every method call and shrinks as methods return. However, the small footprint ensures high efficiency.
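The limited size of the stack is easy to demonstrate with unbounded recursion. This sketch (StackDepthDemo is a hypothetical name) counts how many frames fit before the JVM throws a StackOverflowError; the number varies with the JVM, the thread’s stack size, and the size of each frame.

```java
class StackDepthDemo {

    private static int depth;

    private static void recurse() {
        depth++;     // each call pushes a new frame onto the thread's stack
        recurse();   // no base case, so the stack eventually overflows
    }

    /** Recurses until the thread's stack is exhausted and returns the depth reached. */
    static int maxDepth() {
        depth = 0;
        try {
            recurse();
        } catch (StackOverflowError e) {
            // All frames were popped while the error propagated up to here.
        }
        return depth;
    }

    public static void main(String[] args) {
        System.out.println("Frames before StackOverflowError: " + maxDepth());
    }
}
```

Catching StackOverflowError is only done here for demonstration purposes; in real code, it usually points to a bug like a missing base case.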

The Role of Garbage Collection

Garbage collection is a cornerstone of the JVM’s memory management strategy, as it’s designed to make the best use of the limited system resources. By automating the reclamation process, GC plays several critical roles, including:

- Prevention of memory leaks

- Optimizing memory utilization

- Simplifying development

- Adaptability to workloads

While highly beneficial in many ways, this doesn’t come without trade-offs.

Identifying garbage is done periodically, either triggered by certain memory thresholds, or manually by calling System.gc(), although the latter is usually discouraged.

During garbage collection, the JVM scans the heap for objects that are still reachable and marks any objects no longer referenced for removal.

While bytecode execution and garbage collection run in parallel, they affect each other, especially in terms of performance. A so-called GC pause or stop-the-world event can occur, during which the JVM temporarily suspends the execution of all threads to let the GC do its job.

In well-optimized systems, these pauses are minimal and often unnoticeable. However, in performance-critical applications like web servers, gaming engines, or financial systems, managing these pauses efficiently or keeping them at a minimum is crucial.

Minor GC cycles are fast and usually go unnoticed. Major GC cycles, however, are quite the opposite, as all live objects need to be checked, most likely making the application unresponsive for the duration of the collection.

This is why there are multiple GCs with different approaches and priorities.

Modern GCs, like ZGC, optimize for different scenarios to minimize stop-the-world events and are definitely worth checking out if the default GC doesn’t meet your requirements.
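How much work the GC has done so far can be queried from inside a running application. The sketch below uses the standard GarbageCollectorMXBean; the class GcStats and its method are my own naming. Note that the reported time is an approximate accumulated collection time, which isn’t necessarily identical to pure stop-the-world pause time for concurrent collectors.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

class GcStats {

    /** Total number of collections reported by all collectors so far. */
    static long totalCollections() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount();   // -1 if undefined for this collector
            if (count > 0) {
                total += count;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // The bean names reveal which collector is active, e.g. young and old generation collectors.
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.printf("%s: %d collections, ~%d ms accumulated%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime());
        }
        System.out.println("Total collections: " + totalCollections());
    }
}
```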

The Garbage Collectors of the JVM

Several garbage collectors with different algorithms and priorities are available, each optimized for various workloads.

They are enabled by setting specific JVM flags.

Serial Garbage Collector

The Serial GC is the simplest one in the JVM.

Its single-threaded approach is lightweight and has minimal overhead. However, it can also cause noticeable pauses, especially in larger applications with many objects.

This collector best suits single-threaded applications or resource-constrained environments, such as embedded systems or command line tools.

JVM flag: -XX:+UseSerialGC

Parallel Garbage Collector (Throughput Collector)

The Parallel GC, a.k.a. the Throughput Collector, uses multiple threads to do its duty.

Throughput is maximized by distributing the workload across multiple CPU cores. The trade-off, however, is that the application still experiences GC pauses as GC threads take over CPU resources during the process.

It is most suitable for applications where throughput (i.e., completing tasks as quickly as possible) is more important than minimizing GC pauses. This collector is commonly used in server-side applications where maximum CPU utilization is critical.

JVM flag: -XX:+UseParallelGC

G1 Garbage Collector (Garbage First)

G1 GC is a modern, region-based garbage collector designed to replace the Parallel GC in many scenarios. It focuses on low latency and aims to balance high throughput with minimal pause times.

The heap is divided into equally sized regions, and the GC prioritizes those that contain the most garbage, as the name suggests.

This way, the collection is done in shorter bursts, minimizing longer GC pauses. It is also configurable to meet specific pause time goals, meaning we can specify how long we’re willing to let it pause the JVM, and G1 tries to meet this goal while maximizing efficiency.

Because of its flexibility, G1 has been the default GC in HotSpot-based JDKs since Java 9.

JVM flag: -XX:+UseG1GC

Z Garbage Collector (ZGC)

ZGC is engineered specifically for ultra-low latency with minimal pauses, even for heap sizes in the terabyte range.

It performs most of its work concurrently with Bytecode execution, minimizing stop-the-world events to a few milliseconds. We’re talking about pause times consistently under 10ms for heap sizes up to 16 TB!

The trade-off for this speed is higher CPU utilization.

This is particularly useful where responsiveness is critical, such as in real-time applications. It achieves this by spreading its work over time rather than doing more extensive, disruptive collections.

First deemed production-ready in Java 15, ZGC became a generational GC in Java 21 to improve its performance further. This was another milestone, as benchmarks show incredible results.

For example, Apache Cassandra benchmarked 4x throughput with 75% less heap and sub 1ms GC pauses… that’s incredible! Sure, it all depends on the actual requirements and use case of an application, but it shows that there’s still room to grow in the GC space.

JVM flag: -XX:+UseZGC -XX:+ZGenerational

More information can be found in this JVMLS 2023 talk: Generational ZGC and Beyond

Shenandoah GC

The Shenandoah GC is another low-latency GC that aims to minimize pauses by performing concurrent compaction.

Over time, allocating and deallocating objects on the heap leads to fragmentation, meaning free memory is split into smaller chunks. This might impact the allocation of larger objects, even if there is enough free memory available overall.

To mitigate, live objects are moved together to eliminate fragmentation.

Unlike traditional compacting GCs, Shenandoah performs compaction concurrently with the application’s threads, avoiding long stop-the-world pauses.

As with ZGC, this comes at the cost of higher memory and CPU overhead compared to simpler garbage collectors. This makes it a good fit for real-time and latency-sensitive applications that require predictable behavior.

JVM flag: -XX:+UseShenandoahGC

Epsilon GC

This one is the odd one out; it does nothing.

It’s a no-op GC that doesn’t reclaim any memory, as its primary purpose is to serve as a benchmark for GC overhead and to validate application memory behavior in a controlled environment.

Other use cases are applications with such a huge heap but limited runtime memory requirements that reclaiming memory can be totally ignored to squeeze out the last drop of performance. Or really short-lived applications requiring little memory, or applications where GC overhead is an unacceptable trade-off.

JVM flag: -XX:+UnlockExperimentalVMOptions -XX:+UseEpsilonGC

Here’s an anecdote about leaking memory in missile software: An amusing story about a practical use of the null garbage collector (Microsoft Dev Blogs)

The Right GC for You

Most applications will perform well with the default GC without any additional tuning. However, it’s still a good idea to know what to look for if you need to choose a more suitable GC.

| GC Type | Pause Time | Throughput | Heap Size | Best for |
|---|---|---|---|---|
| Serial | High | Moderate | Small | Single-threaded apps |
| Parallel | Moderate | High | Medium to Large | High-throughput |
| G1 | Low | High | Large | Balanced workloads |
| ZGC | Extra low | Moderate | Massive | Latency-sensitive apps |
| Shenandoah | Extra low | Moderate | Large | Real-time apps |
| Epsilon | None | High | n/a | Benchmarking/Testing |

As always, choosing such an essential part of your application’s lifecycle requires an informed and deliberate decision! There really needs to be a hard requirement to switch GCs or even tune the default one.

Even More Options

Other GCs are available, like the C4 Pauseless GC in the commercial Azul Platform Prime, their premium JVM.

While diving deeper into all the other available options would provide more valuable insights, it’s beyond the scope of this already lengthy article.

Best Practices for Reducing Garbage

Instead of improving garbage disposal, we should first try to reduce the overall amount of garbage.

Minimize Object Creation

- Reuse objects whenever possible, like using StringBuilder instead of manual String concatenation

- Use the Object pool pattern for expensive but reusable objects, like Thread pools or database connection pools

- Prefer primitive types over their corresponding wrapper types

- Avoid intermediate objects, especially in tight loops
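Two of these points are easy to show side by side. In the following sketch (LessGarbage and its methods are names chosen for illustration), each pair of methods computes the same result, but one variant creates intermediate objects on every loop iteration while the other doesn’t. How much of that garbage the JIT compiler can optimize away depends on the JVM, so treat it as an illustration rather than a benchmark.

```java
class LessGarbage {

    /** Joins the numbers 0..n-1 with commas using one reusable buffer. */
    static String joinWithBuilder(int n) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < n; i++) {
            if (i > 0) sb.append(',');
            sb.append(i);
        }
        return sb.toString();
    }

    /** Same result, but every += creates a new intermediate String. */
    static String joinWithConcat(int n) {
        String s = "";
        for (int i = 0; i < n; i++) {
            if (i > 0) s += ",";
            s += i;
        }
        return s;
    }

    /** Primitive accumulator: no wrapper objects are involved. */
    static long sumPrimitive(int n) {
        long sum = 0;
        for (int i = 0; i < n; i++) sum += i;
        return sum;
    }

    /** Boxed accumulator: each += unboxes and boxes again, which may allocate a new Long. */
    static Long sumBoxed(int n) {
        Long sum = 0L;
        for (int i = 0; i < n; i++) sum += i;
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(joinWithBuilder(5));   // 0,1,2,3,4
        System.out.println(sumPrimitive(5));      // 10
    }
}
```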

Data Structure Efficiency

- Use collections that minimize overhead, such as ArrayList over LinkedList.

- Immutability, like Records, goes a long way to reduce memory retention.

Clean up after Yourself

- Use try-with-resources when applicable.

- Be cautious with static fields or long-living collections.

- Consider WeakReference or SoftReference to allow the GC to reclaim memory earlier.
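As a quick reminder of what try-with-resources does for us, here is a minimal sketch with a made-up TrackedResource type. The resource is closed automatically when the block ends, whether it completes normally or with an exception, so nothing is held longer than needed.

```java
class TrackedResource implements AutoCloseable {

    private boolean closed;

    boolean isClosed() {
        return closed;
    }

    String read() {
        if (closed) {
            throw new IllegalStateException("already closed");
        }
        return "data";
    }

    @Override
    public void close() {
        closed = true;   // stand-in for releasing a file handle, socket, etc.
    }

    /** Uses a resource in a try-with-resources block and reports whether it was closed afterwards. */
    static boolean useAndCheckClosed() {
        TrackedResource handle = new TrackedResource();
        try (TrackedResource res = handle) {
            res.read();
        }   // close() is called here automatically
        return handle.isClosed();
    }

    public static void main(String[] args) {
        System.out.println("closed after block: " + useAndCheckClosed());
    }
}
```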

Finalizing Objects

- Avoid relying on the (deprecated) finalize() method and use java.lang.ref.Cleaner instead.

- More information can be found in inside.java Episode 21.
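A typical Cleaner setup could look roughly like the following sketch. NativeBuffer and its State class are hypothetical, and the AtomicBoolean only stands in for a real resource that needs to be released. The important detail is that the cleaning action must not hold a reference to the object it’s registered for, or that object could never become unreachable.

```java
import java.lang.ref.Cleaner;
import java.util.concurrent.atomic.AtomicBoolean;

class NativeBuffer implements AutoCloseable {

    private static final Cleaner CLEANER = Cleaner.create();

    // The cleanup state must not reference the NativeBuffer instance itself.
    private static final class State implements Runnable {
        private final AtomicBoolean released;

        State(AtomicBoolean released) {
            this.released = released;
        }

        @Override
        public void run() {
            released.set(true);   // stand-in for freeing the actual resource
        }
    }

    private final Cleaner.Cleanable cleanable;

    NativeBuffer(AtomicBoolean released) {
        // The action runs when close() is called, or at some point after the
        // buffer becomes unreachable if close() was forgotten.
        this.cleanable = CLEANER.register(this, new State(released));
    }

    @Override
    public void close() {
        cleanable.clean();   // runs the action at most once, even if called repeatedly
    }

    public static void main(String[] args) {
        AtomicBoolean released = new AtomicBoolean(false);
        try (NativeBuffer buffer = new NativeBuffer(released)) {
            System.out.println("in use, released = " + released.get());
        }
        System.out.println("after close, released = " + released.get());
    }
}
```

Combining the Cleaner with AutoCloseable gives deterministic cleanup in the normal case and keeps the Cleaner as a safety net only.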

Measure twice, saw once

- Use profilers to monitor heap usage and identify object retention patterns.

- Keep an eye on general memory usage to detect early signs of leaks or inefficient memory use.

Tune the Settings

- Adjust heap or stack size to accommodate your application’s needs.

- Use JVM flags to tune your chosen GC’s settings, but read the next section first.

Debugging and Analyzing GCs

To make an informed decision on what to tune or which setting to modify, we must first understand why.

The key metrics to analyze are:

Pause Times

Monitor the duration of stop-the-world events, as excessive pauses indicate potential inefficiencies.

Collection Frequency

Frequent GC runs indicate a too-small heap or excessive object churn.

Heap Utilization

Compare before and after collection memory use to determine the effectiveness of GC cycles.

Promotion Rates

Track how often Young Generation objects are promoted to Old Generation.
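For a first impression of heap utilization, a snapshot can be taken from inside the application via the standard MemoryMXBean. This is only a rough sketch with made-up names (HeapSnapshot, utilization), and the before/after comparison relies on System.gc(), which the JVM is free to ignore.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

class HeapSnapshot {

    /** Current heap usage as reported by the JVM. */
    static MemoryUsage heap() {
        return ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
    }

    /** Used heap as a fraction of the currently committed heap. */
    static double utilization(MemoryUsage usage) {
        return (double) usage.getUsed() / usage.getCommitted();
    }

    public static void main(String[] args) {
        MemoryUsage before = heap();
        System.gc();   // only a hint, used to make a visible difference more likely
        MemoryUsage after = heap();

        System.out.printf("before: %d MB used (%.0f%% of committed)%n",
                before.getUsed() >> 20, utilization(before) * 100);
        System.out.printf("after:  %d MB used (%.0f%% of committed)%n",
                after.getUsed() >> 20, utilization(after) * 100);
    }
}
```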

The best way to gather data is by enabling GC logging. These logs provide valuable insight into the JVM’s memory handling, helping to pinpoint issues.

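To enable logging on Java 9 and later, use the unified logging flag:

-Xlog:gc*:file=gc.log

On Java 8 and earlier, which predate unified logging, use the legacy flags instead:

-XX:+PrintGCDetails

-XX:+PrintGCDateStamps

There are also different tools that can help: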

VisualVM

A lightweight tool for monitoring heap usage and GC in real-time. Allows heap dump analysis to identify leaks and excessive object churn.

JDK Mission Control (JMC)

A tool for advanced diagnostics, including GC events, heap usage, profiling memory allocation patterns, and application performance.

Eclipse Memory Analyzer Tool (MAT)

Analyzes heap dumps to identify leaks, large object graphs, and circular references. Use MAT to pinpoint classes and objects responsible for excessive memory usage.

jmap and jhat

The former captures heap dumps from running Java applications, and the latter analyzes them. Note that jhat was removed in JDK 9, so on newer JDKs, the dumps need to be analyzed with one of the other tools.

YourKit

A commercial option for a CPU and memory profiler, including flame graphs, database queries and web requests, and more.

A sensible approach to troubleshooting memory and GC problems consists of different steps we can take:

- Check GC logs for anomalies, like sudden increases of pause times or frequent full GC runs.

- Analyze live objects in the heap to find the most prominent offenders.

- If nothing helps, and you still run out of memory, increase the heap in small increments to give the runtime more breathing room while continuing to analyze the root cause.

- Test alternative GCs that better fit the requirements.

Tuning Memory and the Garbage Truck

Modifying the memory settings of the JVM can significantly enhance the performance and responsiveness of an application. However, changing any default settings without analyzing an application’s behavior and understanding its memory usage patterns and requirements can result in the opposite of the desired goal.

Numerous options are available, so I list only the most common ones to avoid making this article even longer. You can find all the available performance options in the JVM Performance Tuning Options reference listed under Resources.

Optimizing Heap Allocation

The heap has an initial and a maximum size:

-Xms<size>: initial heap size

-Xmx<size>: maximum heap size

The <size> value is a number immediately followed by the first letter of its unit (k, m, or g), appended directly to the flag without a space.

For example, a heap that’s supposed to start at 2 GB and grow up to 8 GB would be:

-Xms2g -Xmx8g
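To double-check which limit the JVM actually ended up with, the maximum heap size can be read at runtime. The sketch below also contains a small hypothetical helper, parseSize, that converts the size notation into bytes, assuming binary units (1k = 1024 bytes); it’s only meant to illustrate the format, not to mirror the JVM’s own parser.

```java
class HeapSize {

    /** Parses a size like "512k", "256m" or "2g" into bytes (illustrative helper). */
    static long parseSize(String size) {
        String s = size.trim().toLowerCase();
        char unit = s.charAt(s.length() - 1);
        long factor;
        switch (unit) {
            case 'k': factor = 1024L; break;
            case 'm': factor = 1024L * 1024; break;
            case 'g': factor = 1024L * 1024 * 1024; break;
            default:  return Long.parseLong(s);   // no suffix: plain bytes
        }
        return Long.parseLong(s.substring(0, s.length() - 1)) * factor;
    }

    public static void main(String[] args) {
        // maxMemory() reports the most memory the JVM will attempt to use for the heap,
        // which roughly corresponds to the -Xmx value.
        long max = Runtime.getRuntime().maxMemory();
        System.out.println("Max heap: " + max / (1024 * 1024) + " MB");
        System.out.println("2g in bytes: " + parseSize("2g"));
    }
}
```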

A heap that is too small can lead to frequent GC cycles and increased pause times, and can potentially cause OutOfMemoryError crashes if the application exceeds the available memory.

On the other hand, it is also vital to avoid over-allocating heap memory.

A too large heap reduces the frequency of garbage collection but can result in longer pause times during full GC cycles, as the JVM has more objects to scan and manage. Also, a large heap consumes more system memory, possibly leading to resource contention with other processes running on the same machine.

The key is to find a balanced heap size that matches the application’s memory requirements, minimizing GC pauses while avoiding unnecessary memory consumption. Start with values that reflect the peak memory usage observed during profiling.

If you’re still running out of memory, you can enable an automatic heap dump to better understand the reasons why it happened:

-XX:+HeapDumpOnOutOfMemoryError

-XX:HeapDumpPath=./heapdump.hprof

Sizing Generations

The sizes of the Young and Old Generation can be adjusted with the following flags:

-XX:NewSize=<size>: Initial size of the Young Generation space

-XX:MaxNewSize=<size>: Maximum size of the Young Generation space

-XX:SurvivorRatio=<int>: Ratio of eden-to-survivor space

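For example, the following values would result in 8 MB for the Eden Space and 4 MB each for the two Survivor Spaces, as the Young Generation consists of Eden plus two Survivor Spaces, and a ratio of 2 makes Eden twice as large as a single Survivor Space:

-XX:NewSize=16m

-XX:SurvivorRatio=2

There is also a flag to do the same for the ratio between old and new generation sizes: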

-XX:NewRatio=<int>: Ratio of old/new generation sizes
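The arithmetic behind these ratios can be written down in a few lines. YoungGenMath is a hypothetical helper that assumes the simple model of one Eden Space plus two equally sized Survivor Spaces; a real JVM aligns and may adapt these sizes, so the actual values can differ.

```java
class YoungGenMath {

    /** One survivor space: young = eden + 2 * survivor, with eden = survivorRatio * survivor. */
    static long survivorBytes(long youngBytes, int survivorRatio) {
        return youngBytes / (survivorRatio + 2);
    }

    /** Eden is whatever remains after the two survivor spaces. */
    static long edenBytes(long youngBytes, int survivorRatio) {
        return youngBytes - 2 * survivorBytes(youngBytes, survivorRatio);
    }

    /** Young generation size for a heap where old / young = newRatio. */
    static long youngBytes(long heapBytes, int newRatio) {
        return heapBytes / (newRatio + 1);
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024;
        long young = 16 * mb;   // -XX:NewSize=16m
        int ratio = 2;          // -XX:SurvivorRatio=2

        System.out.println("Eden:     " + edenBytes(young, ratio) / mb + " MB");       // 8 MB
        System.out.println("Survivor: " + survivorBytes(young, ratio) / mb + " MB");   // 4 MB

        // With -XX:NewRatio=3, a 1024 MB heap leaves a quarter for the young generation.
        System.out.println("Young:    " + youngBytes(1024 * mb, 3) / mb + " MB");      // 256 MB
    }
}
```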

Garbage Collector Settings

Each garbage collector has its own settings, modifying characteristics like acceptable max pause times.

Listing and explaining them all would extend this article even further, so I won’t include them. However, I implore you to read and learn more about them!

HotSpot Virtual Machine Garbage Collection Tuning Guide (Oracle)

ZGC: Configuration & Tuning (OpenJDK)

Shenandoah: Performance Guidelines and Diagnostics (OpenJDK)

Conclusion

Garbage collection is one of Java’s most powerful features, abstracting the complexities of memory management and enabling us to build robust applications without worrying (too much) about leaks and memory problems. However, understanding the nuances is highly valuable and even critical for optimizing performance, especially in high-throughput or latency-sensitive environments.

With various garbage collectors to choose from, tailoring GC behavior to the actual application needs is a breeze. Also, there’s still evolution in the space of garbage collectors, with new GCs like ZGC and Shenandoah setting new benchmarks on latency and throughput.

Using best practices, profiling GC behavior, and appropriate tuning, we can efficiently use memory and deliver consistent performance without fearing dreadful GC pauses.

Resources

Garbage Collection Concepts and Techniques

- Escape Analysis in the HotSpot JIT Compiler

- Object Pool Pattern - Wikipedia

- Why is the finalize() Method Deprecated in Java 9?

- java.lang.ref.Cleaner Documentation

- Inside.java Podcast Episode 21: Finalization and Cleaners

Garbage Collectors in Java

- Looking at Java 21: Generational ZGC

- Z Garbage Collector (ZGC) Documentation

- Generational ZGC and Beyond - JVMLS 2023 Talk

- Shenandoah GC Documentation

- Epsilon GC: The JDK’s Do-Nothing Garbage Collector

- JEP 318: Epsilon GC - A No-Op Garbage Collector

Performance Tuning and Diagnostics

- HotSpot Virtual Machine Garbage Collection Tuning Guide

- ZGC Configuration & Tuning Guide

- Shenandoah GC Performance Guidelines and Diagnostics

- JVM Performance Tuning Options

- Mill Build Blog: Garbage Collector Performance