What Happens After We Hit Compile in Java?

How Bytecode Powers Portability and Performance

When writing Java code, our primary focus is the classes, methods, and best coding practices. However, once we hit “compile,” the source code undergoes quite a transformation before execution.


Compilation transforms source code into bytecode, an intermediate representation and universal language of the Java Virtual Machine (JVM). While most developers rarely interact with bytecode directly, reading and understanding its structure and purpose can provide valuable insights into performance, debugging, and how Java and the JVM work under the hood.

This article is for seasoned Java developers curious about the JVM and for anyone who wants to better understand Java’s inner workings. We’ll take a closer look at Java bytecode: what happens after compilation, how to read bytecode instructions, and how it all ties together within the JVM.

What is Java Bytecode?

In the mid-1990s, Java’s creators sought a way to make software development platform-agnostic, addressing the challenges of writing portable applications. Bytecode became the linchpin for this vision, acting as a “universal machine code,” the lingua franca that powers the JVM, regardless of hardware or operating system.

Instead of directly generating machine-specific instructions like most compiled languages (e.g., C, Go, or Rust), the Java compiler generates an intermediate representation, bytecode, stored in .class files.

The JVM, specific to each platform, takes care of interpreting, running, or compiling this bytecode into machine-specific instructions as needed, making it highly portable.

Bytecode bridges high-level, human-readable Java code and low-level machine code, upholding Java’s “write once, run anywhere” promise.

This approach of using an intermediate representation (IR) isn’t unique to the Java ecosystem. The two other best-known IRs are:

- Common Intermediate Language (CIL), executed by the Common Language Runtime (CLR) in .NET

- LLVM IR, emitted by Clang and other LLVM frontends

Not all IRs prioritize the same aspects. For example, LLVM IR often targets performance and optimization, whereas JVM bytecode prioritizes portability and safety.

The portability is also not restricted to Java alone. Any language that compiles down to bytecode fits nicely into the overall ecosystem and can be run on the JVM, regardless of the underlying operating system or hardware.

From Java to Bytecode

The journey from Java source code to bytecode starts with the compilation process, where a .java file is turned into a .class file containing the bytecode.

Take this simple “hello world” program as an example:

```java
public class HelloWorld {
    public static void main(String[] args) {
        System.out.println("Hello, Bytecode!");
    }
}
```

Compiling the source code with javac HelloWorld.java creates a HelloWorld.class file, which we can examine further with javap -c HelloWorld:

```
Compiled from "HelloWorld.java"
public class HelloWorld {
  public HelloWorld();
    Code:
       0: aload_0
       1: invokespecial #1 // Method java/lang/Object."<init>":()V
       4: return

  public static void main(java.lang.String[]);
    Code:
       0: getstatic #2 // Field java/lang/System.out:Ljava/io/PrintStream;
       3: ldc #3 // String Hello, Bytecode!
       5: invokevirtual #4 // Method java/io/PrintStream.println:(Ljava/lang/String;)V
       8: return
}
```

The output shows the bytecode instructions generated for the class, along with some additional context, such as the constants behind the # indices.

The first part is the implicit, parameterless default constructor the compiler generates for HelloWorld, which simply calls the constructor of java.lang.Object.

The second part is the main method, which loads the static field System.out and calls its println method with the string "Hello, Bytecode!".

To better understand the composition of class files, we need to dissect them a little further.

Anatomy of Class Files

The resulting HelloWorld.class file generated during compilation is more than just a bunch of bytecode instructions.

In fact, it’s a well-defined, strongly typed, tree-like structure. It contains both the bytecode and the metadata the JVM needs, forming a blueprint for executing it.

Every class file contains the following sections (among others):

- Magic Number: The first four bytes of every class file are 0xCAFEBABE, which identify the file as a valid class file. The specification gives no account of why these particular hex digits were chosen; however, the coffee theme made them a fitting choice.

- Constant Pool: Contains all constants, such as class and method names, String literals, and references to type descriptors and method signatures.

- Access Flags: Define the class’s modifiers, such as public, abstract, or final.

- Fields: Information about the class’s fields.

- Methods: The bytecode for each method, including constructors.

- Attributes: Additional metadata and debugging information, enriching the JVM’s understanding of the class structure.

All these parts (and more) are needed to build a complete picture of a class.

The full specification can be found in JVM Spec chapter 4.
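To see the magic number for ourselves, here is a minimal sketch that reads the start of a class file. Per the class file format, the u4 magic is followed by the u2 minor_version and u2 major_version fields, all big-endian. The names ClassFileHeader and readHeader are our own, for illustration only, and the sketch assumes Java 9 or later (for InputStream.readAllBytes). It reads the class file of java.lang.Object from the running JDK.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;

public class ClassFileHeader {

    // Parses the first 8 bytes of a class file: u4 magic, u2 minor_version, u2 major_version
    public static int[] readHeader(byte[] classFile) {
        ByteBuffer buf = ByteBuffer.wrap(classFile);      // big-endian by default, like the class file format
        int magic = buf.getInt();                         // expected: 0xCAFEBABE
        int minor = Short.toUnsignedInt(buf.getShort());  // u2 values are unsigned
        int major = Short.toUnsignedInt(buf.getShort());
        return new int[] { magic, minor, major };
    }

    public static void main(String[] args) throws IOException {
        // Resources ending in ".class" can be read even from JDK modules
        try (InputStream in = Object.class.getResourceAsStream("Object.class")) {
            if (in == null) {
                throw new IOException("Object.class not found");
            }
            int[] h = readHeader(in.readAllBytes());
            // Prints magic=0xCAFEBABE followed by the JDK's own version numbers (e.g., major=61 on JDK 17)
            System.out.printf("magic=0x%08X minor=%d major=%d%n", h[0], h[1], h[2]);
        }
    }
}
```

The major version tells the JVM which class file features to expect; a JVM refuses to load class files whose major version is newer than it supports.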

How Bytecode Enables New Features

Java bytecode is more than just a low-level representation of source code; it’s the enabler of many powerful Java features.

Because metadata about classes, methods, and fields is embedded in a structured, well-defined format, the JVM can provide runtime capabilities that extend far beyond what statically compiled languages typically offer.

One of the most powerful features enabled by bytecode is reflection.

When writing Java code, we rely on high-level constructs like types, methods, and fields. The compiler encodes all these constructs, along with their metadata (names, signatures, modifiers, etc.), into class files, giving the JVM the power of introspection at runtime.

For example, frameworks like Spring or Hibernate rely heavily on reflection. When Spring creates a bean, it uses reflection to discover constructors, annotations, and method signatures to decide how to instantiate and configure the object. Without the detailed metadata stored in bytecode, this level of dynamism wouldn’t be possible.
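As a simplified illustration of this kind of introspection (not how Spring actually implements it), the following sketch discovers annotated methods and instantiates a class through its constructor at runtime. The @Startup annotation and the Service class are hypothetical; the annotation needs RUNTIME retention so that its metadata is kept in the class file and visible to reflection.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ReflectionDemo {

    // Hypothetical marker annotation, kept in the class file and readable at runtime
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    public @interface Startup {}

    // Hypothetical "bean" that a framework might inspect
    public static class Service {
        public Service() {}
        @Startup public void warmCache() {}
        @Startup public void openConnections() {}
        public void handleRequest() {}
    }

    // Returns the sorted names of all methods of `type` annotated with @Startup
    public static List<String> startupMethods(Class<?> type) {
        List<String> names = new ArrayList<>();
        for (Method m : type.getDeclaredMethods()) {   // order is unspecified, hence the sort below
            if (m.isAnnotationPresent(Startup.class)) {
                names.add(m.getName());
            }
        }
        Collections.sort(names);
        return names;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(startupMethods(Service.class)); // [openConnections, warmCache]

        // Instantiate via the no-arg constructor discovered at runtime
        Object bean = Service.class.getDeclaredConstructor().newInstance();
        System.out.println(bean.getClass().getSimpleName()); // Service
    }
}
```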

Another feature uniquely enabled by bytecode is dynamic proxies.

A dynamic proxy is a class generated at runtime that implements one or more interfaces and can intercept method calls.

This feature, provided by the java.lang.reflect.Proxy type, gives frameworks the power to add behaviors like logging, transaction management, or security checks without modifying the underlying source code.

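```java
// Requires: import java.lang.reflect.Proxy;
// `originalObject` is the existing MyInterface implementation whose calls we want to intercept
MyInterface proxy = (MyInterface) Proxy.newProxyInstance(
    MyInterface.class.getClassLoader(),
    new Class<?>[]{MyInterface.class},
    // The handler's parameters must not reuse the names of enclosing locals such as `proxy`
    (proxyInstance, method, methodArgs) -> {
        System.out.println("Intercepted method: " + method.getName());
        return method.invoke(originalObject, methodArgs);
    }
);
```

Under the hood, the JDK generates the bytecode for a new proxy class at runtime; it implements the specified interfaces and routes every call to the invocation handler.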
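The snippet above relies on an existing originalObject. For a self-contained version, here is a minimal runnable sketch. The Greeter interface and the logging helper are hypothetical names, and the handler records method names in a list instead of printing them, so the interception is easy to check.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

public class ProxyDemo {

    // Hypothetical interface used only for this example
    public interface Greeter {
        String greet(String name);
    }

    // Wraps `target` in a proxy that records each intercepted method name in `log`
    public static <T> T logging(Class<T> iface, T target, List<String> log) {
        InvocationHandler handler = (proxyInstance, method, methodArgs) -> {
            log.add(method.getName());                 // added behavior
            return method.invoke(target, methodArgs);  // delegate to the real object
        };
        return iface.cast(Proxy.newProxyInstance(
                iface.getClassLoader(), new Class<?>[]{iface}, handler));
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        Greeter real = name -> "Hello, " + name;
        Greeter proxied = logging(Greeter.class, real, log);

        System.out.println(proxied.greet("Bytecode"));              // Hello, Bytecode
        System.out.println(log);                                    // [greet]
        System.out.println(Proxy.isProxyClass(proxied.getClass())); // true
    }
}
```

Real frameworks typically also unwrap the InvocationTargetException that Method.invoke throws when the target method fails; the sketch omits that for brevity.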

The bytecode-enabled features don’t end here, but they are too numerous to cover in detail, so here’s a short list:

- Annotation Processing

- Lambdas via the invokedynamic instruction

- Custom class loaders for sandboxing or hot reloading

Bytecode Execution in the JVM

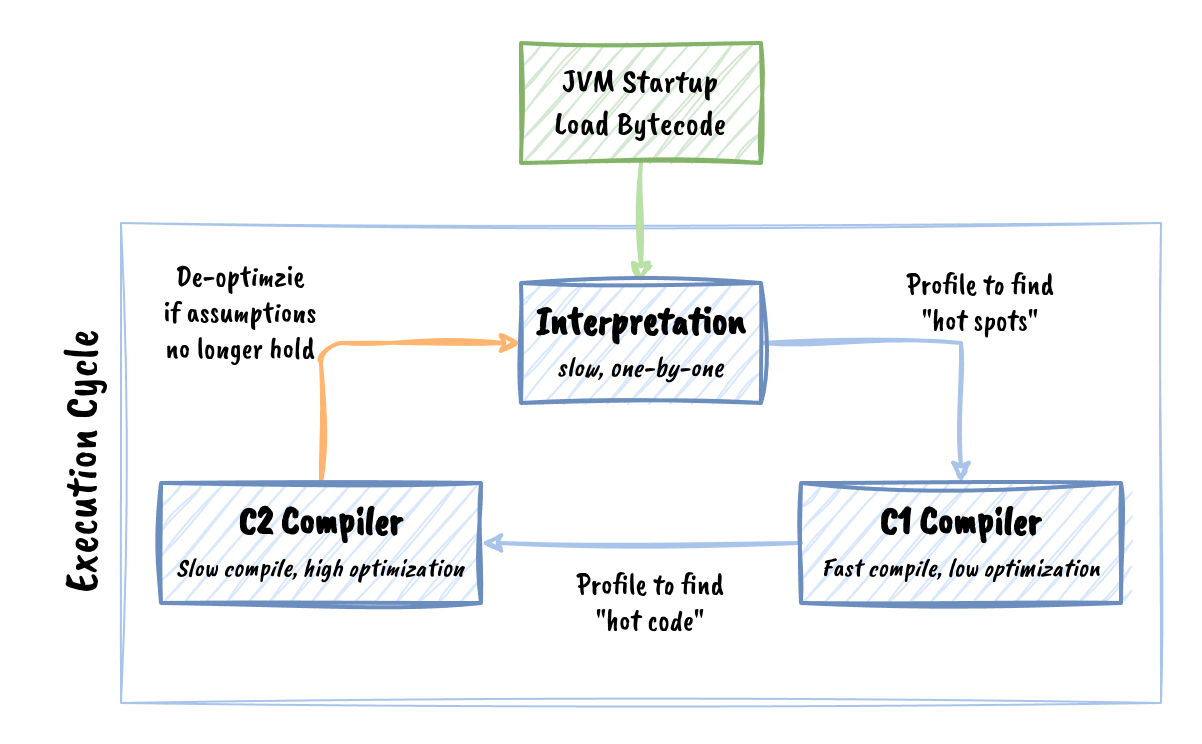

The JVM executes bytecode in two phases:

- Interpretation: Bytecode is executed instruction by instruction.

- JIT compilation: Frequently executed (“hot”) code paths are compiled into native machine code for improved performance.

At first, the instructions are interpreted one at a time. This allows the application to start quickly, but interpretation is slower overall than executing native code.

To boost performance, the JVM monitors execution to identify so-called “hot paths,” which are then compiled “just-in-time” (JIT) to native machine instructions. Subsequent calls execute these native instructions instead of interpreting the bytecode.

This two-tiered approach is a key feature for executing Java code with near-native performance while retaining platform independence.
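One way to watch hot-path detection happen is HotSpot’s -XX:+PrintCompilation flag, which logs methods as they get JIT-compiled. The following sketch (class and method names are illustrative) makes collatzSteps hot by calling it a million times. The exact log format, and when compilation kicks in, depend on the JVM version and its settings.

```java
public class HotLoop {

    // Counts the steps of the Collatz sequence from n down to 1; called very often below
    static long collatzSteps(long n) {
        long steps = 0;
        while (n != 1) {
            n = (n % 2 == 0) ? n / 2 : 3 * n + 1;
            steps++;
        }
        return steps;
    }

    // Sums the step counts for 1..limit, which makes collatzSteps a hot method
    public static long totalSteps(int limit) {
        long total = 0;
        for (int i = 1; i <= limit; i++) {
            total += collatzSteps(i);
        }
        return total;
    }

    public static void main(String[] args) {
        // Try: javac HotLoop.java && java -XX:+PrintCompilation HotLoop | grep HotLoop
        System.out.println(totalSteps(1_000_000));
    }
}
```

In the log, HotLoop::collatzSteps should show up once the JVM decides it is hot, typically first at a lower tier and later again at a higher one.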

JIT Compilation Modes

In the HotSpot JVM, JIT compilation is also two-tiered, with two compilers that differ in how aggressively they optimize.

C1, the client compiler, performs basic optimizations which are lightweight and fast. It improves startup times and overall responsiveness and focuses on low compilation overhead. The trade-off, though, is only moderate performance improvements. Still, it quickly generates reasonably optimized native code to improve performance early in an application’s lifecycle.

C2, the server compiler, takes a more aggressive approach, with optimizations like loop unrolling, method inlining, escape analysis, and more. It mainly benefits long-running applications like web applications, where achieving peak performance is more critical. The downside is that the application needs “warmup time” before these more time-intensive optimizations are applied and peak performance is reached.

The JVM doesn’t blindly optimize bytecode but rather does its own runtime profiling to make informed decisions about which code paths would benefit the most from specific techniques.

For instance, if a method is usually called with arguments that fit specific patterns (e.g., based on branch profiles or type speculation), the JIT compiler generates native code optimized for these assumptions. If the pattern changes, the JVM can undo the optimization by discarding the compiled code and later recompiling the method with an optimization better suited to the new usage pattern.

De-Optimization

Although the JIT compilation provides substantial performance improvements, it’s important to note that it’s not a one-way street. The JVM can also de-optimize code when the assumptions made during JIT compilation no longer hold.

For example, let’s say the JVM optimizes a method based on the assumption that it always returns a specific type or behaves in a predictable manner (e.g., inlining a method call or removing type checks based on profiling data). If, during runtime, the method’s behavior changes—for instance, encountering a different input type or returning unexpected results—the JVM can de-optimize the code.

It does this by discarding the previously compiled machine code and reverting to interpreting the bytecode until it gathers enough data to apply a new optimization strategy suited to the changed circumstances.

Here’s the whole execution cycle of bytecode to machine code and back:

This flexibility ensures that the JVM remains responsive and adaptable to changing runtime conditions, maintaining correctness while striving for optimal performance.
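To provoke a de-optimization, the following sketch (all names are hypothetical) first calls totalArea with nothing but Square instances, so the call site s.area() sees a single receiver type, and then introduces a Circle. Running it with javac DeoptDemo.java && java -XX:+PrintCompilation DeoptDemo may show totalArea marked “made not entrant,” which is how HotSpot reports discarded compiled code. Whether and when that happens depends on the JVM version, so treat this as an experiment rather than a guaranteed result.

```java
import java.util.Arrays;

public class DeoptDemo {

    public interface Shape { double area(); }

    public static final class Square implements Shape {
        private final double side;
        public Square(double side) { this.side = side; }
        @Override public double area() { return side * side; }
    }

    public static final class Circle implements Shape {
        private final double radius;
        public Circle(double radius) { this.radius = radius; }
        @Override public double area() { return Math.PI * radius * radius; }
    }

    // Hot method: during phase 1 its call site s.area() only ever sees Square
    public static double totalArea(Shape[] shapes) {
        double sum = 0;
        for (Shape s : shapes) {
            sum += s.area();
        }
        return sum;
    }

    public static void main(String[] args) {
        Shape[] squares = new Shape[1_000];
        Arrays.fill(squares, new Square(2));
        double checksum = 0;

        // Phase 1: single receiver type, so the JIT may inline Square.area() behind a type check
        for (int i = 0; i < 20_000; i++) {
            checksum += totalArea(squares);
        }

        // Phase 2: a Circle reaches the same call site and breaks that assumption
        Shape[] mixed = { new Square(2), new Circle(1) };
        for (int i = 0; i < 20_000; i++) {
            checksum += totalArea(mixed);
        }
        System.out.println(checksum); // printed so the work cannot be optimized away
    }
}
```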

JIT Vs AOT

While JIT works at runtime, another approach known as Ahead-of-Time (AOT) compilation can be employed to compile Java bytecode into native machine code directly.

AOT eliminates the need for runtime compilation, reducing startup time. However, AOT is, by definition, a static process. Pre-compilation sacrifices hotspot detection and the dynamic optimizations that JIT would make based on real-time profiling data.

Even though the application starts at “full speed,” its peak performance is likely lower than what a JIT compiler could deliver.

Also, AOT compilation produces a platform-specific native binary, so platform independence is lost in the process.

JIT compilation adapts to actual usage patterns, whereas AOT relies on static analysis of the code.

In recent years, tools like GraalVM have become more widespread, offering a hybrid approach that leverages both JIT and AOT compilation, providing a balance between quick startup times and long-term performance.

There are also ways to alleviate the performance penalty with “profile-guided optimization” (PGO), but this is out of the scope of this article. To know more about it, check out the GraalVM documentation.

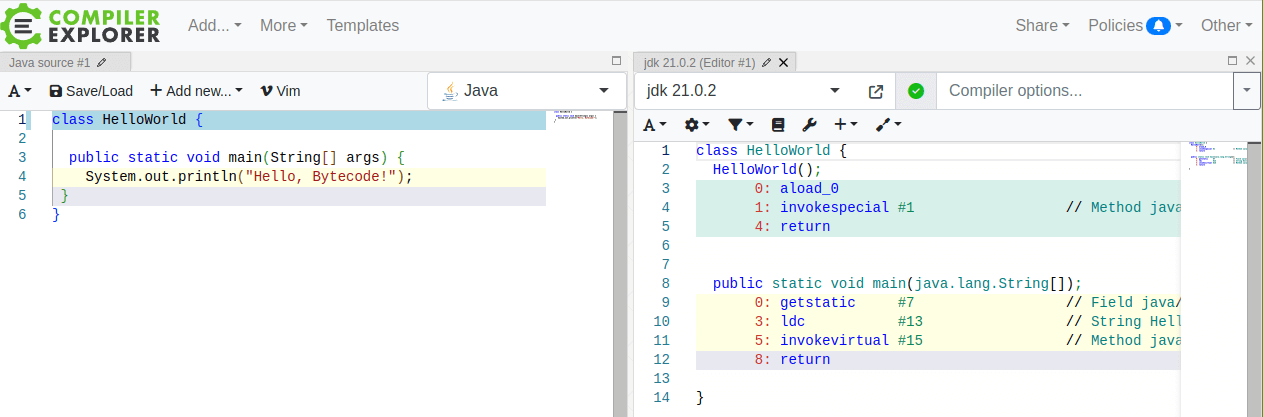

Tools for Reading Classes

To actually read bytecode, we need tools to show us the instructions stored in class files in a sensible manner.

Each tool prepares the bytecode differently to give us more context to map instructions back to the corresponding Java source code.

The Included One: javap

The simplest one included in the JDK is javap, which we’ve already seen earlier in the article.

The -c flag disassembles the class file and shows the instructions, and -v outputs a more verbose variant, including the constant pool and more.

Check all the available options by simply calling javap without any further arguments.
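The JDK also exposes javac and javap through the java.util.spi.ToolProvider API (JDK 9+), so the compile-and-disassemble round trip from earlier can be scripted from Java. A minimal sketch, assuming JDK 11 or later (for Files.writeString) and a full JDK rather than a bare runtime; JavapDemo and disassemble are our own names, and the temporary directory is left in place for inspection.

```java
import java.io.IOException;
import java.io.PrintWriter;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.spi.ToolProvider;

public class JavapDemo {

    // Compiles `source` (which must declare class `className`) and returns the `javap -c` listing
    public static String disassemble(String className, String source) {
        try {
            Path dir = Files.createTempDirectory("bytecode-demo");
            Path src = dir.resolve(className + ".java");
            Files.writeString(src, source);

            // Equivalent to: javac -d <dir> <dir>/<className>.java
            ToolProvider javac = ToolProvider.findFirst("javac").orElseThrow();
            if (javac.run(System.out, System.err, "-d", dir.toString(), src.toString()) != 0) {
                throw new IllegalStateException("javac failed");
            }

            // Equivalent to: javap -c <dir>/<className>.class, with output captured in a string
            ToolProvider javap = ToolProvider.findFirst("javap").orElseThrow();
            StringWriter listing = new StringWriter();
            PrintWriter out = new PrintWriter(listing);
            int rc = javap.run(out, new PrintWriter(System.err, true),
                    "-c", dir.resolve(className + ".class").toString());
            out.flush();
            if (rc != 0) {
                throw new IllegalStateException("javap failed");
            }
            return listing.toString();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String source = "public class HelloWorld {\n"
                + "    public static void main(String[] args) {\n"
                + "        System.out.println(\"Hello, Bytecode!\");\n"
                + "    }\n"
                + "}\n";
        System.out.println(disassemble("HelloWorld", source));
    }
}
```

The printed listing should resemble the javap output shown earlier, although constant pool indices can differ between javac versions.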

IntelliJ

IntelliJ IDEA has a bytecode viewer found under “View → Show Bytecode.”

Eclipse

The “Bytecode” view can be activated under “Window → Show View → Other” and then search for “Bytecode.”

Be aware that the view might initially be “ASMified,” showing ASM code instead of the raw instructions. Check the view’s icons on the top-right to switch between different modes.

The Playground: Compiler Explorer

If we just want to play around or analyze a piece of code in isolation, the “Compiler Explorer” found at godbolt.org is fantastic for that!

It supports many languages, including Java, and shows the source code and its bytecode side by side in a two-pane editor.

The panes are linked by color, and hovering over a line highlights the corresponding part on the other side. Also, most bytecode instructions have a tooltip with documentation, as do constant pool indices.

Be aware, though, that the class needs to be package-private, i.e., declared without an access modifier such as public.

JITWatch

JITWatch is an open-source tool that helps to analyze the decisions made by the JIT compiler.

The JVM flags -XX:+UnlockDiagnosticVMOptions and -XX:+LogCompilation output the JIT log files, and JITWatch parses and visualizes them for us to gain valuable insight into what’s happening behind the scenes.

This helps to understand the JVM’s behavior and identify unexpected bottlenecks or suboptimal JIT decisions that could impact overall performance.

Common Bytecode Instructions

Knowing the most commonly used instructions can give more insights into how the JVM executes Java code and can be helpful in optimization, debugging, and advanced performance tuning.

| Category | Instruction | Description |
|---|---|---|
| Control Flow | tableswitch | switch via a jump table (contiguous case values) |
| | lookupswitch | switch via a sorted lookup table (sparse case values) |
| | goto | Unconditional jump to an offset |
| | if_icmpeq, if_icmpne | Branch based on comparing two integers |
| | ifeq, ifne | Branch based on comparing an integer with 0 |
| Arithmetic | iadd, isub | Add/subtract two integers from the operand stack |
| | imul, idiv | Multiply/divide two integers from the operand stack |
| | iinc | Increment a local variable by a constant value |
| Stack Operations | nop | No operation |
| | dup | Duplicate the top value |
| | pop | Remove the top value |
| | swap | Swap the top two values |
| Variable Access | aload | Load a reference from a local variable onto the stack |
| | astore | Store a reference from the stack into a local variable |
| | iload | Load an integer from a local variable onto the stack |
| | istore | Store an integer from the stack into a local variable |
| Constants | ldc | Push a value from the constant pool onto the stack |
| | bipush | Push a byte-sized integer onto the stack |
| | sipush | Push a short integer onto the stack |
| | aconst_null | Push a null reference onto the stack |
| Method Invocation | invokevirtual | Call a virtual (instance) method |
| | invokestatic | Call a static method |
| | invokespecial | Call a constructor, a private method, or a superclass method |
| | invokedynamic | Dynamically resolve method calls at runtime (via a bootstrap method) |
| Memory Management | new | Allocate memory for an object |
| | dup | Duplicate a reference for use in constructors |
| | monitorenter | Acquire a monitor (used for synchronized blocks) |
| | monitorexit | Release a monitor |
| Exception Handling | athrow | Throw an exception |

These are not all of the roughly 200 bytecode instructions, although some are just variations, like iload_<n>.

The complete list of instructions is available in chapter 6 of the JVM Specification.

Hidden Gems and Bytecode Tricks

While some instructions, like aload or invokevirtual, are staples of everyday Java programs, others are more specialized and offer unique capabilities that unlock new ways to interact with the JVM and create exciting features.

We’ll take a close look at the following instructions:

- invokedynamic: Dynamic method binding

- nop: Doing nothing

- tableswitch and lookupswitch: Branching with switch

- iinc: Increment efficiency

These hidden gems are especially beneficial for those working on advanced topics like performance tuning, bytecode manipulation, or custom JVM languages.

Dynamic Features for Everyone

The star of the show has to be invokedynamic.

Introduced in Java 7, it’s one of the most groundbreaking additions to the JVM.

Unlike the other invoke... instructions, it defers binding the method call to its actual target to runtime.

This might just sound like lazy loading, but it enables a more dynamic approach to the language overall.

The most prominent Java feature using this instruction is lambda expressions, which leverage the instruction for efficient and flexible runtime implementation.

Beyond Java itself, languages like Groovy and JRuby use the instruction to implement dynamic typing and other dynamic features.
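Plain Java source can’t emit invokedynamic directly; javac only generates it for features such as lambdas and (since Java 9) string concatenation. The java.lang.invoke API behind it can still be used directly, though. In this minimal sketch, a MutableCallSite (one of the call site kinds an invokedynamic bootstrap method can return) has a fixed type, and its dynamicInvoker() handle always calls whatever target is currently installed, so the same “call site” is relinked at runtime. CallSiteDemo, shout, and whisper are illustrative names.

```java
import java.lang.invoke.MethodHandle;
import java.lang.invoke.MethodHandles;
import java.lang.invoke.MethodType;
import java.lang.invoke.MutableCallSite;

public class CallSiteDemo {

    static String shout(String s)   { return s.toUpperCase(); }
    static String whisper(String s) { return s.toLowerCase(); }

    // Invokes the same call site twice, relinking it to a different target in between
    public static String[] callTwice(String input) {
        try {
            MethodType type = MethodType.methodType(String.class, String.class);
            MethodHandles.Lookup lookup = MethodHandles.lookup();

            // The call site's type is fixed, but its target can change at runtime
            MutableCallSite site = new MutableCallSite(type);
            MethodHandle invoker = site.dynamicInvoker(); // always calls the current target

            site.setTarget(lookup.findStatic(CallSiteDemo.class, "shout", type));
            String first = (String) invoker.invokeExact(input);

            site.setTarget(lookup.findStatic(CallSiteDemo.class, "whisper", type));
            String second = (String) invoker.invokeExact(input);

            return new String[] { first, second };
        } catch (RuntimeException | Error e) {
            throw e;
        } catch (Throwable t) {
            // findStatic and invokeExact declare checked exceptions
            throw new IllegalStateException(t);
        }
    }

    public static void main(String[] args) {
        String[] results = callTwice("Hello");
        System.out.println(results[0] + " " + results[1]); // HELLO hello
    }
}
```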

Doing Nothing

The nop (no operation) instruction doesn’t perform any action when executed, making it seemingly useless at first glance.

However, it still has essential use cases, like acting as a placeholder during bytecode manipulation or instrumentation, to ensure that offsets remain consistent.

Another use case is debugging, where nop can help isolate problematic code by replacing other instructions.

Branching Efficiency

Branching is a critical aspect of program control flow, so having specialized instructions is a must-have.

switch-based branching has two dedicated instructions:

- tableswitch

- lookupswitch

If the cases are a small range of contiguous integer values, the tableswitch instruction creates a simple jump table to map each case value to its corresponding branch offset.

This is highly efficient, as it guarantees O(1), or constant-time, access to each case.

Its sibling lookupswitch is used when the case values are sparse.

Instead of a jump table, the JVM uses a sorted list of key-offset pairs and can perform a binary search to find the matching case.

In terms of time complexity, the best case is still O(1).

However, the average and worst cases are O(log n).

These two instructions are a good example of why knowing how the JVM works under the hood helps us to write more efficient code.

If we have a switch with widely spaced values that could be mapped to a smaller, contiguous range, the compiler may be able to use tableswitch instead of lookupswitch, reducing time complexity.
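A quick way to see both instructions is to compile two switch statements and inspect them with javap -c SwitchShapes. The class and method names are made up for illustration. As far as we understand it, javac picks the instruction with a cost heuristic that weighs table size against the number of cases, so contiguous keys like 1 to 4 typically yield a tableswitch and widely spaced keys like HTTP status codes a lookupswitch; treat the comments as expectations to verify with javap rather than guarantees.

```java
public class SwitchShapes {

    // Dense, contiguous keys 1..4: javac typically emits a tableswitch
    public static String dense(int day) {
        switch (day) {
            case 1: return "Mon";
            case 2: return "Tue";
            case 3: return "Wed";
            case 4: return "Thu";
            default: return "?";
        }
    }

    // Sparse keys 200, 404, 500: a jump table would need ~300 slots, so javac typically emits a lookupswitch
    public static String sparse(int status) {
        switch (status) {
            case 200: return "OK";
            case 404: return "Not Found";
            case 500: return "Server Error";
            default: return "?";
        }
    }

    public static void main(String[] args) {
        System.out.println(dense(3) + " / " + sparse(404)); // Wed / Not Found
    }
}
```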

Increment Local Variables Efficiently

A neat little trick of the JVM is incrementing a local variable by a constant value with the iinc instruction.

It’s a highly specialized and efficient way to increment (or decrement) the value of a local variable directly without loading and storing values repeatedly on the operand stack.

This efficiency makes it a preferred choice for things like loop counters and simple arithmetic updates in methods. It keeps the bytecode compact, reducing the required overall instructions, which also reduces the class file size.
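A small loop is enough to see iinc in action. In this sketch (IincDemo and sumEveryThird are illustrative names), javac compiles the compound assignment i += 3 on a local int with a constant operand into a single iinc instruction, which javap -c IincDemo should confirm. The sum += i line has a non-constant right-hand side, so it still goes through iload, iadd, and istore.

```java
public class IincDemo {

    // Sums 0, 3, 6, ... below `limit`
    public static int sumEveryThird(int limit) {
        int sum = 0;
        for (int i = 0; i < limit; i += 3) {  // `i += 3` compiles to: iinc <slot of i>, 3
            sum += i;                          // non-constant operand: iload, iload, iadd, istore
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(sumEveryThird(10)); // 18 (0 + 3 + 6 + 9)
    }
}
```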

Understanding Bytecode for Better Performance

These bytecode instructions, while low-level, provide valuable insight into how the JVM executes Java code. Each Java construct (like loops, method calls, and conditionals) is mapped to a combination of these instructions.

The Java compiler performs relatively few optimizations when generating bytecode (constant folding is one example) and leaves most of them to the JIT compiler, and neither is guaranteed to produce optimal code for every scenario.

By analyzing the resulting bytecode using tools like javap, we can better understand how source code translates to bytecode and identify potential optimization areas at the source code level instead of solely relying on the compiler and runtime.

The Consequences of Convenience

A classic pitfall, for example, is autoboxing. The automatic conversion between primitives and their wrapper classes is a convenience feature that simplifies our code and is invaluable in certain scenarios. Still, it can add considerable overhead in others.

Take this loop, for example:

```java
Integer sum = 0;
for (int i = 0; i < 100; i++) {
    sum += i;
}
```

I know that’s not necessarily everyday code, but it serves to illustrate the point.

The resulting bytecode shows calls to Integer.intValue (offset 14) and Integer.valueOf (offset 19) in the loop body:

```
 0: iconst_0
 1: invokestatic  #7  // Method java/lang/Integer.valueOf:(I)Ljava/lang/Integer;
 4: astore_1
 5: iconst_0
 6: istore_2
// LOOP HEADER
 7: iload_2
 8: bipush        100
10: if_icmpge     29
// LOOP BODY START
13: aload_1
14: invokevirtual #13 // Method java/lang/Integer.intValue:()I
17: iload_2
18: iadd
19: invokestatic  #7  // Method java/lang/Integer.valueOf:(I)Ljava/lang/Integer;
22: astore_1
// LOOP BODY END
23: iinc          2, 1
26: goto          7
```

By using a primitive int instead, we could save 200 method invocations (one unboxing and one boxing call in each of the 100 iterations), each of which jumps away, creates a stack frame, and must return. And since Integer.valueOf only caches values from -128 to 127 by default, most of these iterations may also allocate a new Integer object.
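For a side-by-side comparison, here is a sketch of both variants (BoxingDemo, sumBoxed, and sumPrimitive are illustrative names). Running javap -c BoxingDemo should show the intValue/valueOf pair inside the loop of sumBoxed, while the loop body of sumPrimitive consists only of iload, iadd, and istore instructions. Actual timing differences depend on the JVM and on how much the JIT compiler manages to optimize away.

```java
public class BoxingDemo {

    // Boxed accumulator: each `sum += i` unboxes (Integer.intValue) and re-boxes (Integer.valueOf)
    public static int sumBoxed(int n) {
        Integer sum = 0;
        for (int i = 0; i < n; i++) {
            sum += i;
        }
        return sum;  // unboxed once more on return
    }

    // Primitive accumulator: no method calls and no allocations inside the loop
    public static int sumPrimitive(int n) {
        int sum = 0;
        for (int i = 0; i < n; i++) {
            sum += i;
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(sumBoxed(100) + " " + sumPrimitive(100)); // 4950 4950
    }
}
```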

Understanding JIT and Hot Code Optimization

Even though the JIT compiler does its best to optimize hot code, certain constructs are harder to optimize effectively than others.

The key optimizations are:

- Method inlining: Calls to small, frequently used methods are replaced with the body of the called method, removing the overhead of a method call.

- Loop unrolling: The loop body is replicated several times per iteration, reducing loop-control overhead such as counter updates and branches.

- Escape analysis: If an object never escapes the method or thread that creates it, the JIT can avoid allocating it on the heap (HotSpot does this via scalar replacement), reducing pressure on the garbage collector.

- Peephole optimization: Small instruction sequences are replaced with more efficient equivalents.
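The following sketch shows code that is a good candidate for both inlining and escape analysis: lengthSquared is tiny, and each Point is created, used, and dropped within a single loop iteration. EscapeDemo and Point are hypothetical names. Whether C2 actually eliminates the allocations depends on the JVM version and the inlining decisions it makes; comparing allocation rates with and without HotSpot’s -XX:-DoEscapeAnalysis flag (which turns the analysis off) is one way to check.

```java
public class EscapeDemo {

    // Small immutable value class
    static final class Point {
        final int x;
        final int y;
        Point(int x, int y) { this.x = x; this.y = y; }
        int lengthSquared() { return x * x + y * y; }  // tiny method: a good inlining candidate
    }

    public static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 0; i < n; i++) {
            // `p` is never stored in a field, returned, or handed to non-inlined code,
            // so after inlining it does not escape and its allocation can be eliminated
            Point p = new Point(i, i + 1);
            total += p.lengthSquared();
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(3)); // 19 (1 + 5 + 13)
    }
}
```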

We shouldn’t write our code solely to make the (JIT) compiler’s work easier. But it doesn’t hurt to write JIT-friendly code when it doesn’t affect the “human-friendliness” too much, or when we need highly optimizable code and peak performance.

These are the four things I would recommend looking for:

- Write smaller, isolated methods: They are easier to inline and to keep free of side effects.

- Avoid excessive autoboxing: This reduces unnecessary object creation and method calls.

- Leverage immutability: This allows the JIT to make stronger assumptions about behavior.

- Minimize dynamic dispatch: Avoid deep inheritance hierarchies where possible, as call sites that see many different receiver types complicate method resolution and are harder to inline.

These four coding practices are good ideas to follow, even outside the context of bytecode optimization.

Conclusion

Java bytecode is more than an intermediate language—it’s the cornerstone of the JVM’s portability, performance, and extensibility. From enabling dynamic features like lambdas and proxies to powering optimizations via the JIT compiler, bytecode plays a vital role in modern Java development.

Understanding Java bytecode is like peering under the hood of the JVM—a chance to see the engine that powers your code. By dissecting class files and exploring JIT compilation, we can:

- gain deeper insights into the Java compiler and JVM behavior

- make more informed decisions about our code and optimize for better runtime performance

- debug complex issues that might not be apparent at the source code level

Moreover, bytecode not only forms the foundation for advanced Java features but is also the backbone of a wide range of languages like Kotlin, Scala, and Groovy, demonstrating its versatility and adaptability.

Whether you’re debugging, optimizing, or simply exploring the JVM, understanding bytecode equips you with a powerful toolset. It’s not just about knowing what your code does—it’s about knowing how it does it.